Setup

!apt-get install tree -qq
!pip install --upgrade git+https://github.com/keras-team/keras-cv -qq
!pip install git+https://github.com/divamgupta/image-segmentation-keras -qq
!pip install git+https://github.com/cleanlab/cleanvision.git -qq
!pip install cleanlab -qq
!pip install scikeras -qq

Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

Looking in indexes: https://pypi.org/simple, https://us-python.pkg.dev/colab-wheels/public/simple/

Collecting git+https://github.com/divamgupta/image-segmentation-keras

Cloning https://github.com/divamgupta/image-segmentation-keras to /tmp/pip-req-build-zzw3x8ac

Running command git clone --filter=blob:none --quiet https://github.com/divamgupta/image-segmentation-keras /tmp/pip-req-build-zzw3x8ac

Resolved https://github.com/divamgupta/image-segmentation-keras to commit 750a44ca16c0ca3355c9486026377a239635df4d

Preparing metadata (setup.py) ... done

Collecting h5py<=2.10.0

Downloading h5py-2.10.0.tar.gz (301 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 301.1/301.1 kB 22.6 MB/s eta 0:00:00

Preparing metadata (setup.py) ... done

Requirement already satisfied: Keras>=2.0.0 in /usr/local/lib/python3.10/dist-packages (from keras-segmentation==0.3.0) (2.12.0)

Collecting imageio==2.5.0

Downloading imageio-2.5.0-py3-none-any.whl (3.3 MB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 3.3/3.3 MB 92.1 MB/s eta 0:00:00

Requirement already satisfied: imgaug>=0.4.0 in /usr/local/lib/python3.10/dist-packages (from keras-segmentation==0.3.0) (0.4.0)

Requirement already satisfied: opencv-python in /usr/local/lib/python3.10/dist-packages (from keras-segmentation==0.3.0) (4.7.0.72)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from keras-segmentation==0.3.0) (4.65.0)

Requirement already satisfied: pillow in /usr/local/lib/python3.10/dist-packages (from imageio==2.5.0->keras-segmentation==0.3.0) (9.5.0)

Requirement already satisfied: numpy in /usr/local/lib/python3.10/dist-packages (from imageio==2.5.0->keras-segmentation==0.3.0) (1.22.4)

Requirement already satisfied: six in /usr/local/lib/python3.10/dist-packages (from h5py<=2.10.0->keras-segmentation==0.3.0) (1.16.0)

Requirement already satisfied: scipy in /usr/local/lib/python3.10/dist-packages (from imgaug>=0.4.0->keras-segmentation==0.3.0) (1.10.1)

Requirement already satisfied: matplotlib in /usr/local/lib/python3.10/dist-packages (from imgaug>=0.4.0->keras-segmentation==0.3.0) (3.7.1)

Requirement already satisfied: scikit-image>=0.14.2 in /usr/local/lib/python3.10/dist-packages (from imgaug>=0.4.0->keras-segmentation==0.3.0) (0.19.3)

Requirement already satisfied: Shapely in /usr/local/lib/python3.10/dist-packages (from imgaug>=0.4.0->keras-segmentation==0.3.0) (2.0.1)

Requirement already satisfied: packaging>=20.0 in /usr/local/lib/python3.10/dist-packages (from scikit-image>=0.14.2->imgaug>=0.4.0->keras-segmentation==0.3.0) (23.1)

Requirement already satisfied: networkx>=2.2 in /usr/local/lib/python3.10/dist-packages (from scikit-image>=0.14.2->imgaug>=0.4.0->keras-segmentation==0.3.0) (3.1)

Requirement already satisfied: PyWavelets>=1.1.1 in /usr/local/lib/python3.10/dist-packages (from scikit-image>=0.14.2->imgaug>=0.4.0->keras-segmentation==0.3.0) (1.4.1)

Requirement already satisfied: tifffile>=2019.7.26 in /usr/local/lib/python3.10/dist-packages (from scikit-image>=0.14.2->imgaug>=0.4.0->keras-segmentation==0.3.0) (2023.4.12)

Requirement already satisfied: fonttools>=4.22.0 in /usr/local/lib/python3.10/dist-packages (from matplotlib->imgaug>=0.4.0->keras-segmentation==0.3.0) (4.39.3)

Requirement already satisfied: pyparsing>=2.3.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib->imgaug>=0.4.0->keras-segmentation==0.3.0) (3.0.9)

Requirement already satisfied: cycler>=0.10 in /usr/local/lib/python3.10/dist-packages (from matplotlib->imgaug>=0.4.0->keras-segmentation==0.3.0) (0.11.0)

Requirement already satisfied: contourpy>=1.0.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib->imgaug>=0.4.0->keras-segmentation==0.3.0) (1.0.7)

Requirement already satisfied: kiwisolver>=1.0.1 in /usr/local/lib/python3.10/dist-packages (from matplotlib->imgaug>=0.4.0->keras-segmentation==0.3.0) (1.4.4)

Requirement already satisfied: python-dateutil>=2.7 in /usr/local/lib/python3.10/dist-packages (from matplotlib->imgaug>=0.4.0->keras-segmentation==0.3.0) (2.8.2)

Building wheels for collected packages: keras-segmentation, h5py

Building wheel for keras-segmentation (setup.py) ... done

Created wheel for keras-segmentation: filename=keras_segmentation-0.3.0-py3-none-any.whl size=34600 sha256=aadaccbaef653b5efcf3662e28dceba20aa03fed84c0fd59136fe6b5d5e65d85

Stored in directory: /tmp/pip-ephem-wheel-cache-atuxzteb/wheels/c3/c0/74/d7b2d21081981b49c0aafed6ff4c00531781dbffd31391799c

Building wheel for h5py (setup.py) ... done

Created wheel for h5py: filename=h5py-2.10.0-cp310-cp310-linux_x86_64.whl size=5620021 sha256=cc4f9c1ae4db2cdf80e9b00669bd688acb166148c0d4800d418e5177eb06e1c3

Stored in directory: /root/.cache/pip/wheels/21/bc/58/0d0c6056e1339f40188d136cd838c6554d9c17545196dd9110

Successfully built keras-segmentation h5py

Installing collected packages: imageio, h5py, keras-segmentation

Attempting uninstall: imageio

Found existing installation: imageio 2.25.1

Uninstalling imageio-2.25.1:

Successfully uninstalled imageio-2.25.1

Attempting uninstall: h5py

Found existing installation: h5py 3.8.0

Uninstalling h5py-3.8.0:

Successfully uninstalled h5py-3.8.0

Successfully installed h5py-2.10.0 imageio-2.5.0 keras-segmentation-0.3.0


Installing build dependencies ... done

Getting requirements to build wheel ... done

Preparing metadata (pyproject.toml) ... done

We need to restart the environment after installing.

# Python ≥3.7 is recommended
import sys
assert sys.version_info >= (3, 7)
import os
from pathlib import Path
from time import strftime

# Scikit-Learn ≥1.0.1 is recommended
from packaging import version
import sklearn
from sklearn.datasets import load_sample_image
from sklearn.datasets import load_sample_images
from sklearn.datasets import fetch_openml
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_predict
assert version.parse(sklearn.__version__) >= version.parse("1.0.1")

# TensorFlow ≥2.8.0 is recommended
import tensorflow as tf
import tensorflow_datasets as tfds
from tensorflow.keras.utils import image_dataset_from_directory
from tensorflow.keras import optimizers
import keras_cv
from keras_cv import bounding_box
from keras_cv import visualization
from keras_segmentation.models.unet import vgg_unet
assert version.parse(tf.__version__) >= version.parse("2.8.0")

# Image augmentation
import albumentations as A

# Data-centric AI
from cleanvision.imagelab import Imagelab
from cleanlab.filter import find_label_issues
from scikeras.wrappers import KerasClassifier, KerasRegressor

# Common imports
import numpy as np
import shutil
import pathlib
import resource
from functools import partial
import tqdm
import cv2

# To plot pretty figures
%matplotlib inline
import matplotlib.pyplot as plt
import matplotlib as mpl
plt.rc('font', size=14)
plt.rc('axes', labelsize=14, titlesize=14)
plt.rc('legend', fontsize=14)
plt.rc('xtick', labelsize=10)
plt.rc('ytick', labelsize=10)

# to make this notebook's output stable across runs
np.random.seed(42)
tf.random.set_seed(42)

if not tf.config.list_physical_devices('GPU'):
    print("No GPU was detected. Neural nets can be very slow without a GPU.")
    if "google.colab" in sys.modules:
        print("Go to Runtime > Change runtime and select a GPU hardware "
              "accelerator.")
    if "kaggle_secrets" in sys.modules:
        print("Go to Settings > Accelerator and select GPU.")

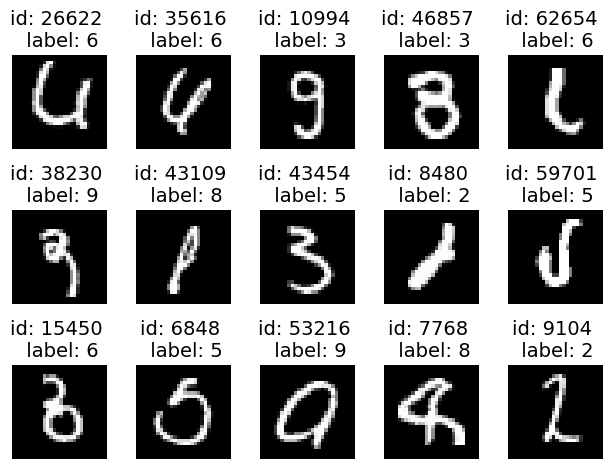

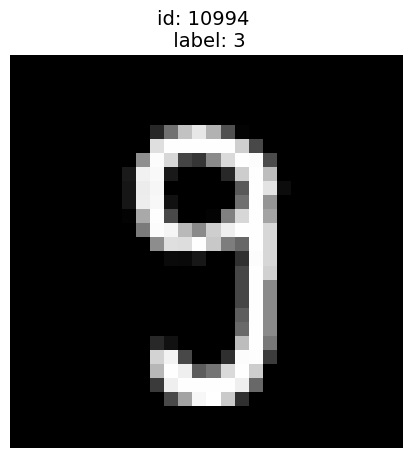

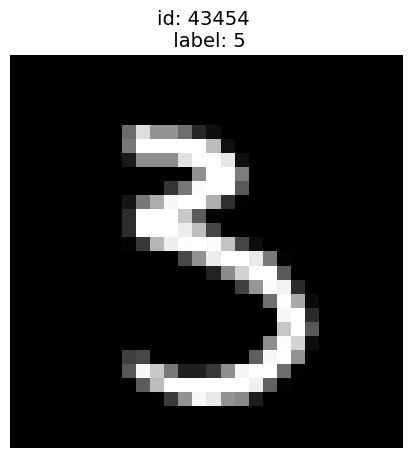

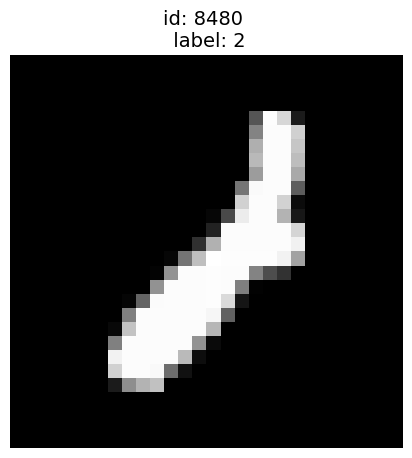

A few utility functions to plot grayscale and RGB images, and to load and visualize the Pascal VOC object detection dataset:

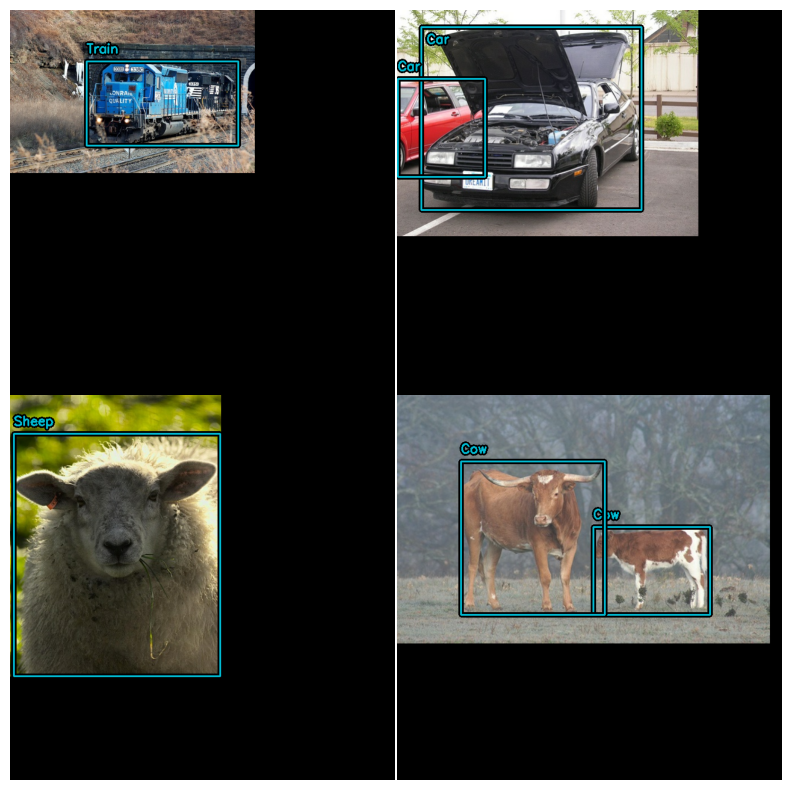

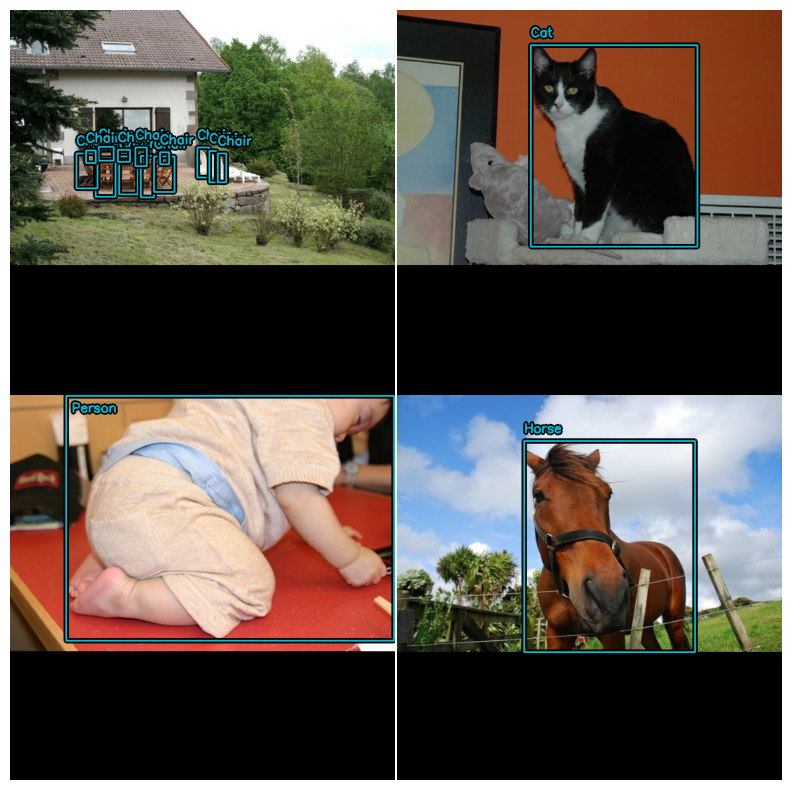

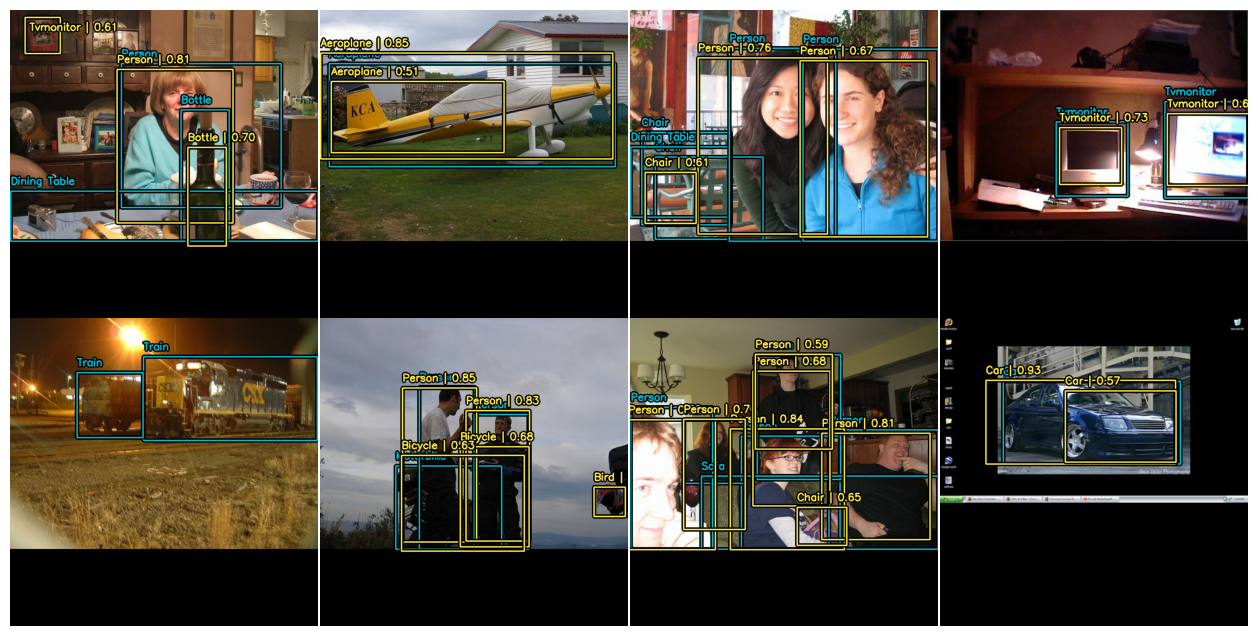

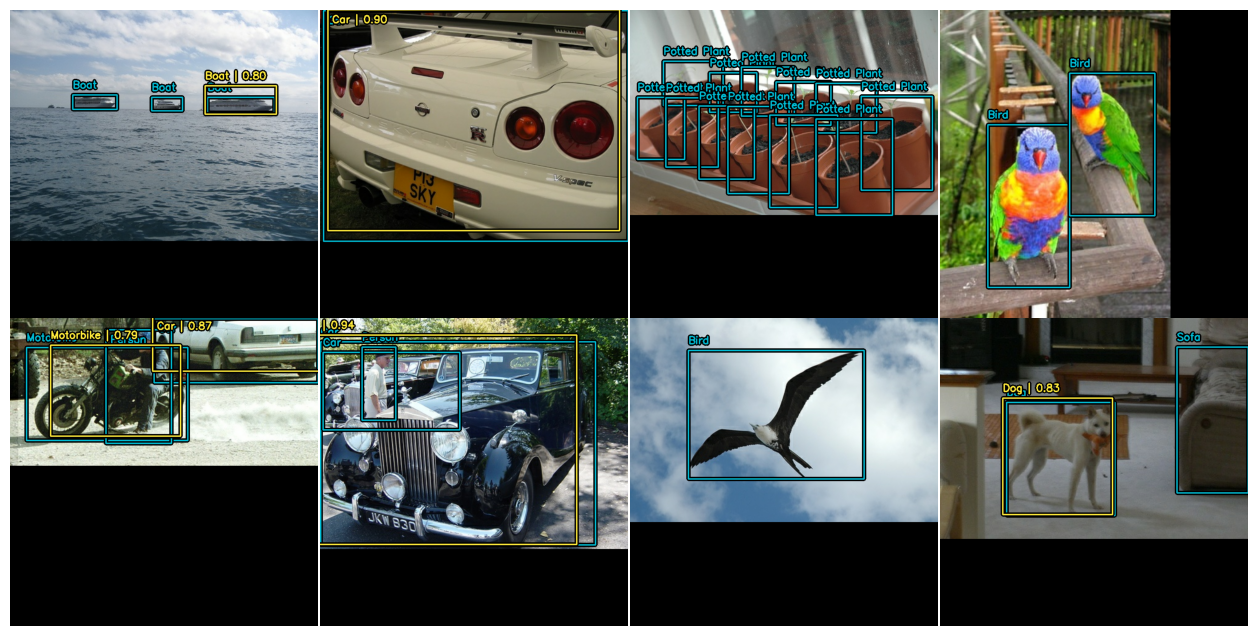

def plot_image(image):
    plt.imshow(image, cmap="gray", interpolation="nearest")
    plt.axis("off")

def plot_color_image(image):
    plt.imshow(image, interpolation="nearest")
    plt.axis("off")

def plot_examples(id_iter, nrows=1, ncols=1):
    # Assumes an array X of flattened 28x28 images and an array labels are
    # defined elsewhere in the notebook (the name X is an assumption).
    for count, id in enumerate(id_iter):
        plt.subplot(nrows, ncols, count + 1)
        plt.imshow(X[id].reshape(28, 28), cmap="gray")
        plt.title(f"id: {id} \n label: {labels[id]}")
        plt.axis("off")
    plt.tight_layout(h_pad=2.0)

# Class mapping for Pascal VOC
class_ids = [
    "Aeroplane", "Bicycle", "Bird", "Boat", "Bottle", "Bus", "Car", "Cat",
    "Chair", "Cow", "Dining Table", "Dog", "Horse", "Motorbike", "Person",
    "Potted Plant", "Sheep", "Sofa", "Train", "Tvmonitor", "Total",
]
class_mapping = dict(zip(range(len(class_ids)), class_ids))

def visualize_dataset(inputs, value_range, rows, cols, bounding_box_format):
    inputs = next(iter(inputs.take(1)))
    images, bounding_boxes = inputs["images"], inputs["bounding_boxes"]
    visualization.plot_bounding_box_gallery(
        images,
        value_range=value_range,
        rows=rows,
        cols=cols,
        y_true=bounding_boxes,
        scale=5,
        font_scale=0.7,
        bounding_box_format=bounding_box_format,
        class_mapping=class_mapping,
    )

def unpackage_raw_tfds_inputs(inputs, bounding_box_format):
    image = inputs["image"]
    boxes = keras_cv.bounding_box.convert_format(
        inputs["objects"]["bbox"],
        images=image,
        source="rel_yxyx",
        target=bounding_box_format,
    )
    bounding_boxes = {
        "classes": tf.cast(inputs["objects"]["label"], dtype=tf.float32),
        "boxes": tf.cast(boxes, dtype=tf.float32),
    }
    return {"images": tf.cast(image, tf.float32), "bounding_boxes": bounding_boxes}

def load_pascal_voc(split, dataset, bounding_box_format):
    ds = tfds.load(dataset, split=split, with_info=False, shuffle_files=True)
    ds = ds.map(
        lambda x: unpackage_raw_tfds_inputs(x, bounding_box_format=bounding_box_format),
        num_parallel_calls=tf.data.AUTOTUNE,
    )
    return ds

def visualize_detections(model, dataset, bounding_box_format):
    images, y_true = next(iter(dataset.take(1)))
    y_pred = model.predict(images)
    y_pred = bounding_box.to_ragged(y_pred)
    visualization.plot_bounding_box_gallery(
        images,
        value_range=(0, 255),
        bounding_box_format=bounding_box_format,
        y_true=y_true,
        y_pred=y_pred,
        scale=4,
        rows=2,
        cols=4,
        show=True,
        font_scale=0.7,
        class_mapping=class_mapping,
    )

What is a Convolution?

A neuron’s weights can be represented as a small image the size of the receptive field. For example, the figure below shows two possible sets of weights, called filters (or convolution kernels). In TensorFlow, each input image is typically represented as a 3D tensor of shape [height, width, channels]. A mini-batch is represented as a 4D tensor of shape [mini-batch size, height, width, channels]. The weights of a convolutional layer are represented as a 4D tensor of shape [fh, fw, fn', fn], where fh and fw are the height and width of the filters, fn' is the number of feature maps (channels) in the previous layer, and fn is the number of filters in the layer. The bias terms of a convolutional layer are simply represented as a 1D tensor of shape [fn].
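To make these shapes concrete, here is a minimal NumPy sketch of a convolutional layer's forward pass (not TensorFlow's implementation, which is far more efficient). The helper name `naive_conv2d` is hypothetical; it assumes no padding and, like deep learning libraries, computes a cross-correlation (the kernel is not flipped). Each output value is the bias of filter k plus the sum, over the receptive field and all input channels, of inputs times weights:

```python
import numpy as np

def naive_conv2d(inputs, kernels, biases, stride=1):
    """Naive "valid" convolution (cross-correlation).

    inputs:  [batch, height, width, fn_prev]
    kernels: [fh, fw, fn_prev, fn]
    biases:  [fn]
    returns: [batch, out_h, out_w, fn]
    """
    batch, height, width, fn_prev = inputs.shape
    fh, fw, k_in, fn = kernels.shape
    assert k_in == fn_prev, "kernel depth must match the number of input channels"
    out_h = (height - fh) // stride + 1
    out_w = (width - fw) // stride + 1
    outputs = np.empty((batch, out_h, out_w, fn), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            # Receptive field for every instance: [batch, fh, fw, fn_prev]
            patch = inputs[:, i * stride:i * stride + fh, j * stride:j * stride + fw, :]
            # Sum over the filter height, width and input channels -> [batch, fn]
            outputs[:, i, j, :] = np.tensordot(
                patch, kernels, axes=([1, 2, 3], [0, 1, 2])) + biases
    return outputs

rng = np.random.default_rng(42)
x = rng.random((2, 10, 12, 3))   # mini-batch of 2 RGB images, 10 x 12 pixels
w = rng.random((3, 3, 3, 4))     # fh=3, fw=3, fn'=3, fn=4
b = np.zeros(4)                  # one bias term per filter
print(naive_conv2d(x, w, b).shape)   # (2, 8, 10, 4)
```

With `stride=2`, the same inputs produce an output of shape (2, 4, 5, 4).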

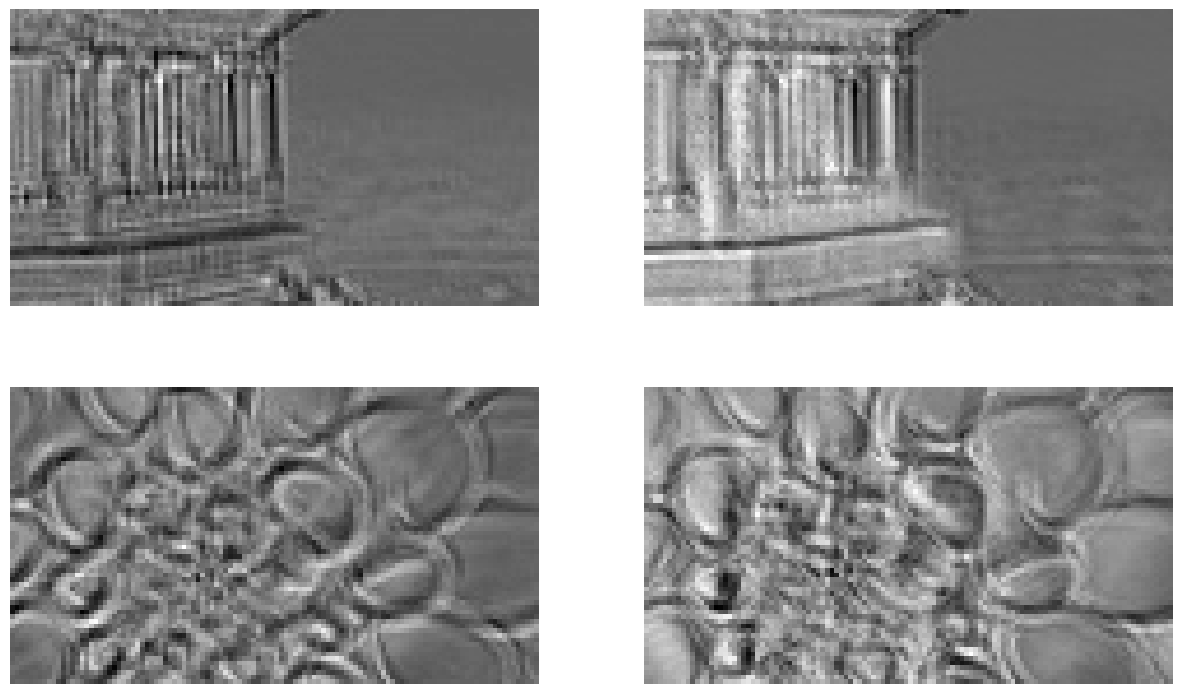

Let’s look at a simple example. The following code loads two color sample images using Scikit-Learn’s load_sample_image() function: one of a Chinese temple, and the other of a flower. The pixel intensities (for each color channel) are represented as bytes from 0 to 255, so we scale these features simply by dividing by 255, to get floats ranging from 0 to 1. Then we create two 7 × 7 filters (one with a vertical white line in the middle, and the other with a horizontal white line in the middle).

# Load sample images
china = load_sample_image("china.jpg") / 255
flower = load_sample_image("flower.jpg") / 255
images = np.array([china, flower])
batch_size, height, width, channels = images.shape

# Create 2 filters
filters = np.zeros(shape=(7, 7, channels, 2), dtype=np.float32)  # [height, width, channel of inputs, channel of feature maps]
filters[:, 3, :, 0] = 1  # vertical line
filters[3, :, :, 1] = 1  # horizontal line

plot_image(filters[:, :, 0, 0])
plot_image(filters[:, :, 0, 1])

Now if all neurons in a layer use the same vertical line filter (and the same bias term), and you feed the network the image, the layer will output a feature map. Here, we apply both filters to both images using the tf.nn.conv2d() function, which is part of TensorFlow’s low-level Deep Learning API. In this example, we use zero padding (padding="SAME") and a stride of 1.

The output is a 4D tensor. The dimensions are: batch size, height, width, channels. The first dimension (batch size) is 2 since there are 2 input images. The next two dimensions are the height and width of the output feature maps: since padding="SAME" and strides=1, the output feature maps have the same height and width as the input images (in this case, 427×640). Lastly, this convolutional layer has 2 filters, so the last dimension is 2: there are 2 output feature maps per input image.

outputs = tf.nn.conv2d(images, filters, strides=1, padding="SAME")  # [batches, height, width, channel of feature maps]
outputs.shape

TensorShape([2, 427, 640, 2])

def crop(images):
    return images[150:220, 130:250]  # crop for better visualization

plot_image(crop(images[0, :, :, 0]))
plt.show()

for feature_map_index, filename in enumerate(["china_vertical", "china_horizontal"]):
    plot_image(crop(outputs[0, :, :, feature_map_index]))
    plt.show()

Notice that the vertical white lines get enhanced in one feature map while the rest gets blurred. Similarly, the other feature map is what you get if all neurons use the same horizontal line filter; notice that the horizontal white lines get enhanced while the rest is blurred out. Thus, a layer full of neurons using the same filter outputs a feature map, which highlights the areas in an image that activate the filter the most. Of course you do not have to define the filters manually: instead, during training the convolutional layer will automatically learn the most useful filters for its task, and the layers above will learn to combine them into more complex patterns.
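To see why a line filter highlights matching lines, here is a small NumPy sketch (an illustration, not the notebook's code). It builds a synthetic 9 × 9 grayscale image containing a single vertical white line, then applies the same kind of 7 × 7 vertical and horizontal line filters created above, with zero ("same") padding and a stride of 1. The helper `conv2d_same` is hypothetical:

```python
import numpy as np

def conv2d_same(image, kernel):
    """Single-channel cross-correlation with zero ("same") padding, stride 1."""
    kh, kw = kernel.shape
    pad_h, pad_w = kh - 1, kw - 1
    # Any odd extra row/column of padding goes at the bottom/right
    padded = np.pad(image, ((pad_h // 2, pad_h - pad_h // 2),
                            (pad_w // 2, pad_w - pad_w // 2)))
    out = np.zeros(image.shape, dtype=np.float64)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out

image = np.zeros((9, 9))
image[:, 4] = 1.0            # a vertical white line in column 4

vertical = np.zeros((7, 7))
vertical[:, 3] = 1           # vertical line in the middle of the filter
horizontal = np.zeros((7, 7))
horizontal[3, :] = 1         # horizontal line in the middle of the filter

v_map = conv2d_same(image, vertical)
h_map = conv2d_same(image, horizontal)
print(v_map.max(), h_map.max())   # 7.0 1.0
print(np.argmax(v_map[4]))        # 4: the strongest response sits on the line
```

The vertical filter responds seven times more strongly than the horizontal one, and only along the line itself, which is the "enhancement" visible in the feature maps above.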

Convolutional Layer

Instead of manually creating the variables, however, you can simply use the tf.keras.layers.Conv2D layer. The code below creates a Conv2D layer with 32 filters, each 7 × 7, using a stride of 1 (both horizontally and vertically), VALID padding, and applying the linear activation function to its outputs. As you can see, convolutional layers have quite a few hyperparameters: you must choose the number of filters, their height and width, the strides, and the padding type. As always, you can use cross-validation to find the right hyperparameter values, but this is very time-consuming. We will discuss common CNN architectures later, to give you some idea of what hyperparameter values work best in practice.

images = load_sample_images()["images"]
images = tf.keras.layers.CenterCrop(height=70, width=120)(images)  # Functional API
images = tf.keras.layers.Rescaling(scale=1 / 255)(images)
images.shape

TensorShape([2, 70, 120, 3])

Let’s call this layer, passing it the two test images:

conv_layer = tf.keras.layers.Conv2D(filters=32, kernel_size=7)
fmaps = conv_layer(images)
fmaps.shape

TensorShape([2, 64, 114, 32])

conv_layer.get_config()

{'name': 'conv2d',
 'trainable': True,
 'dtype': 'float32',
 'filters': 32,
 'kernel_size': (7, 7),
 'strides': (1, 1),
 'padding': 'valid',
 'data_format': 'channels_last',
 'dilation_rate': (1, 1),
 'groups': 1,
 'activation': 'linear',
 'use_bias': True,
 'kernel_initializer': {'class_name': 'GlorotUniform',
  'config': {'seed': None}},
 'bias_initializer': {'class_name': 'Zeros', 'config': {}},
 'kernel_regularizer': None,
 'bias_regularizer': None,
 'activity_regularizer': None,
 'kernel_constraint': None,
 'bias_constraint': None}

The height and width have both shrunk by 6 pixels. This is because the Conv2D layer does not use any zero padding by default, which means that we lose a few pixels on the sides of the output feature maps, depending on the size of the filters. Since the filters are initialized randomly, they’ll initially detect random patterns. Let’s take a look at the first 2 output feature maps for each image:
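As a quick check on that 6-pixel shrinkage: with valid padding, a stride of 1 and an f × f kernel, each spatial dimension loses f − 1 pixels. For the 7 × 7 kernels used here:

```latex
\text{out} = \text{in} - f + 1
\quad\Longrightarrow\quad
70 - 7 + 1 = 64, \qquad 120 - 7 + 1 = 114
```

This matches the TensorShape([2, 64, 114, 32]) printed above.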

plt.figure(figsize=(15, 9))
for image_idx in (0, 1):
    for fmap_idx in (0, 1):
        plt.subplot(2, 2, image_idx * 2 + fmap_idx + 1)
        plt.imshow(fmaps[image_idx, :, :, fmap_idx], cmap="gray")
        plt.axis("off")
plt.show()

As you can see, randomly generated filters typically act like edge detectors, which is great since that’s a useful tool in image processing, and that’s the type of filters that a convolutional layer typically starts with. Then, during training, it gradually learns improved filters to recognize useful patterns for the task.

If instead we set padding="same", then the inputs are padded with enough zeros on all sides to ensure that the output feature maps end up with the same size as the inputs (hence the name of this option):

conv_layer = tf.keras.layers.Conv2D(filters=32, kernel_size=7, padding="same")
fmaps = conv_layer(images)
fmaps.shape

TensorShape([2, 70, 120, 32])
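The amount of zero padding that "same" adds can be computed directly. The sketch below is a hypothetical helper (not a Keras API) that follows TensorFlow's documented convention for SAME padding: the output size is ceil(input size / stride), the total padding is whatever makes the last window fit, and when that total is odd the extra row or column goes at the bottom or right:

```python
import math

def same_padding(input_size, kernel_size, stride=1):
    """Return (output_size, pad_before, pad_after) for "same" padding."""
    output_size = math.ceil(input_size / stride)
    pad_total = max((output_size - 1) * stride + kernel_size - input_size, 0)
    pad_before = pad_total // 2
    pad_after = pad_total - pad_before   # the extra one, if any, goes at the end
    return output_size, pad_before, pad_after

print(same_padding(70, 7))             # (70, 3, 3)
print(same_padding(120, 7))            # (120, 3, 3)
print(same_padding(70, 7, stride=2))   # (35, 2, 3)
```

With a stride of 1 and a 7 × 7 kernel, 3 zeros are added on each side, which is exactly why the 70 × 120 size is preserved.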

If the stride is greater than 1 (in any direction), then the output size will not be equal to the input size, even if padding="same". For example, if you set strides=2 (or equivalently strides=(2, 2)), then the output feature maps will be 35 × 60:

conv_layer = tf.keras.layers.Conv2D(filters=32, kernel_size=7, padding="same", strides=2)
fmaps = conv_layer(images)
fmaps.shape

TensorShape([2, 35, 60, 32])

# This utility function can be useful to compute the size of the
# feature maps output by a convolutional layer. It also returns
# the number of ignored rows or columns if padding="valid", or the
# number of zero-padded rows or columns if padding="same".
def conv_output_size(input_size, kernel_size, strides=1, padding="valid"):
    if padding == "valid":
        z = input_size - kernel_size + strides
        output_size = z // strides
        num_ignored = z % strides
        return output_size, num_ignored
    else:
        output_size = (input_size - 1) // strides + 1
        num_padded = (output_size - 1) * strides + kernel_size - input_size
        return output_size, num_padded

conv_output_size(np.array([70, 120]), kernel_size=7, strides=2, padding="same")

(array([35, 60]), array([5, 5]))

Just like a Dense layer, a Conv2D layer holds all the layer’s weights, including the kernels and biases. The kernels are initialized randomly, while the biases are initialized to zero. These weights are accessible as TF variables via the weights attribute, or as NumPy arrays via the get_weights() method:

kernels, biases = conv_layer.get_weights()
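For the layer defined above (7 × 7 kernels, 3 input channels, 32 filters), kernels should have shape (7, 7, 3, 32) and biases shape (32,). The layer's parameter count follows from those shapes; here is a small plain-Python sketch (`conv2d_param_count` is a hypothetical helper, not a Keras function):

```python
def conv2d_param_count(kernel_h, kernel_w, in_channels, filters, use_bias=True):
    """Number of trainable parameters in a Conv2D layer."""
    weights = kernel_h * kernel_w * in_channels * filters   # kernels: [fh, fw, fn', fn]
    biases = filters if use_bias else 0                      # biases:  [fn]
    return weights + biases

print(conv2d_param_count(7, 7, 3, 32))   # 4736
```

The count does not depend on the image size, the padding or the strides, since every position in the image reuses the same weights.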

You can explore other useful image kernels at https://setosa.io/ev/image-kernels/.

Pooling layer

Max pooling

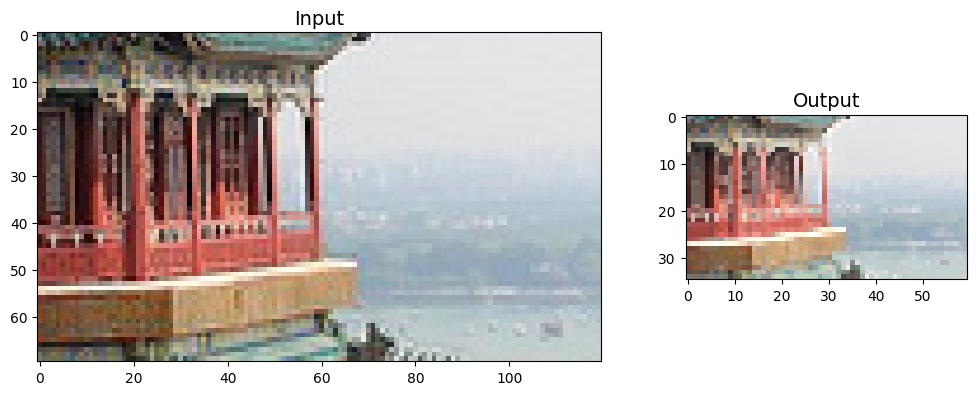

Implementing a max pooling layer in TensorFlow is quite easy. The following code creates a max pooling layer using a 2 × 2 kernel. The strides default to the kernel size, so this layer uses a stride of 2 (both horizontally and vertically). By default, it uses VALID padding (i.e., no padding at all):

max_pool = tf.keras.layers.MaxPool2D(pool_size=2)

output = max_pool(images)

fig = plt.figure(figsize=(12, 8))
gs = mpl.gridspec.GridSpec(nrows=1, ncols=2, width_ratios=[2, 1])
ax1 = fig.add_subplot(gs[0, 0])
ax1.set_title("Input")
ax1.imshow(images[0])  # plot the 1st image
ax2 = fig.add_subplot(gs[0, 1])
ax2.set_title("Output")
ax2.imshow(output[0])  # plot the output for the 1st image
plt.show()

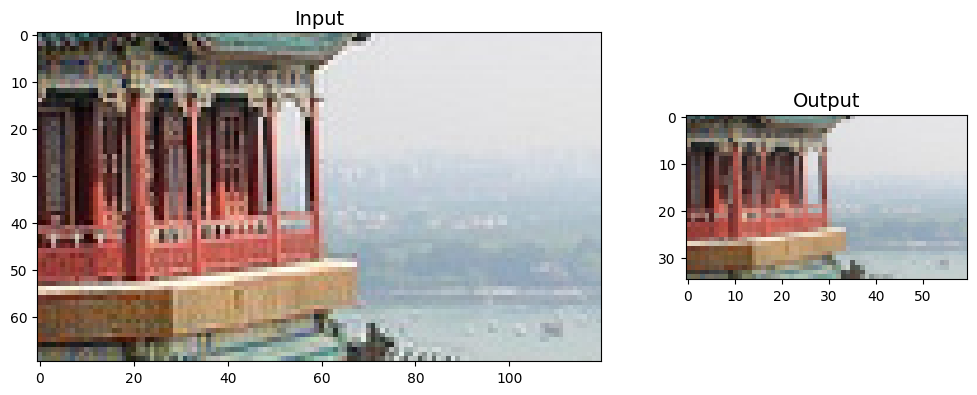
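To see exactly what the layer computes, here is a NumPy sketch of 2 × 2 max pooling with a stride of 2 and valid padding (`max_pool2d` is a hypothetical helper, not the Keras implementation). Because the stride equals the pool size, the windows don't overlap, so the input can simply be reshaped into blocks:

```python
import numpy as np

def max_pool2d(inputs, pool_size=2):
    """Max pooling with stride == pool_size and "valid" padding.

    inputs: [batch, height, width, channels]
    """
    batch, height, width, channels = inputs.shape
    out_h, out_w = height // pool_size, width // pool_size
    # Drop leftover rows/columns (valid padding), then split into pool windows
    trimmed = inputs[:, :out_h * pool_size, :out_w * pool_size, :]
    windows = trimmed.reshape(batch, out_h, pool_size, out_w, pool_size, channels)
    return windows.max(axis=(2, 4))

x = np.arange(16, dtype=np.float32).reshape(1, 4, 4, 1)
print(max_pool2d(x)[0, :, :, 0].tolist())   # [[5.0, 7.0], [13.0, 15.0]]
```

Applied to a batch shaped like `images` above, [2, 70, 120, 3], it returns [2, 35, 60, 3]: each spatial dimension is halved and the number of channels is unchanged.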

Average pooling

To create an average pooling layer, just use AvgPool2D instead of MaxPool2D. As you might expect, it works exactly like a max pooling layer, except it computes the mean rather than the max.

avg_pool = tf.keras.layers.AvgPool2D(pool_size=2)

output_avg = avg_pool(images)

fig = plt.figure(figsize=(12, 8))
gs = mpl.gridspec.GridSpec(nrows=1, ncols=2, width_ratios=[2, 1])
ax1 = fig.add_subplot(gs[0, 0])
ax1.set_title("Input")
ax1.imshow(images[0])  # plot the 1st image
ax2 = fig.add_subplot(gs[0, 1])
ax2.set_title("Output")
ax2.imshow(output_avg[0])  # plot the output for the 1st image
plt.show()
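The NumPy equivalent differs from a max pooling sketch only in the reduction: each non-overlapping 2 × 2 window is replaced by its mean instead of its maximum. A minimal sketch, assuming a stride equal to the pool size and valid padding (`avg_pool2d` is a hypothetical helper):

```python
import numpy as np

def avg_pool2d(inputs, pool_size=2):
    """Average pooling with stride == pool_size and "valid" padding."""
    batch, height, width, channels = inputs.shape
    out_h, out_w = height // pool_size, width // pool_size
    trimmed = inputs[:, :out_h * pool_size, :out_w * pool_size, :]
    windows = trimmed.reshape(batch, out_h, pool_size, out_w, pool_size, channels)
    return windows.mean(axis=(2, 4))   # mean instead of max

x = np.arange(16, dtype=np.float32).reshape(1, 4, 4, 1)
print(avg_pool2d(x)[0, :, :, 0].tolist())   # [[2.5, 4.5], [10.5, 12.5]]
```

Every input value contributes to the output, so average pooling loses less information than max pooling, but it also smooths out the strongest activations.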

Depthwise pooling

Note that max pooling and average pooling can be performed along the depth dimension instead of the spatial dimensions, although it’s not as common. This can allow the CNN to learn to be invariant to various features. For example, it could learn multiple filters, each detecting a different rotation of the same pattern, and the depthwise max pooling layer would ensure that the output is the same regardless of the rotation. The CNN could similarly learn to be invariant to anything: thickness, brightness, skew, color, and so on.

Keras does not include a depthwise max pooling layer, but it’s not too difficult to implement a custom layer for that:

class DepthPool(tf.keras.layers.Layer):
    def __init__(self, pool_size=2, **kwargs):
        super().__init__(**kwargs)
        self.pool_size = pool_size

    def call(self, inputs):
        shape = tf.shape(inputs)  # shape[-1] is the number of channels
        groups = shape[-1] // self.pool_size  # number of channel groups
        new_shape = tf.concat([shape[:-1], [groups, self.pool_size]], axis=0)
        return tf.reduce_max(tf.reshape(inputs, new_shape), axis=-1)

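depth_output = DepthPool(pool_size=3)(images)
print(depth_output.shape)

plt.figure(figsize=(12, 8))
plt.subplot(1, 2, 1)
plt.title("Input")
plt.imshow(images[0])  # plot the 1st image
plt.axis("off")
plt.subplot(1, 2, 2)
plt.title("Output")
plt.imshow(depth_output[0, ..., 0], cmap="gray")  # plot 1st image's output
plt.axis("off")
plt.show()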
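The reshape trick used in `DepthPool` is easy to check in plain NumPy. This hypothetical `depth_max_pool` helper mirrors the layer's logic: split the channel axis into groups of `pool_size` consecutive channels and keep the maximum of each group. Like the layer, it assumes the number of channels is divisible by `pool_size`; unlike the layer, it raises a `ValueError` when that is not the case:

```python
import numpy as np

def depth_max_pool(inputs, pool_size=2):
    """Max pooling along the last (channel) axis."""
    *spatial, channels = inputs.shape
    if channels % pool_size != 0:
        raise ValueError("the number of channels must be divisible by pool_size")
    groups = channels // pool_size
    grouped = inputs.reshape(*spatial, groups, pool_size)   # [..., groups, pool_size]
    return grouped.max(axis=-1)

x = np.array([[[[1, 5, 2, 0, 3, 4]]]], dtype=np.float32)   # 1 pixel, 6 channels
print(depth_max_pool(x, pool_size=3).tolist())              # [[[[5.0, 4.0]]]]
```

For the RGB images above (3 channels) and `pool_size=3`, the output keeps the spatial size and has a single channel, matching the shape printed by the `DepthPool` cell.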

Global Average Pooling

One last type of pooling layer that you will often see in modern architectures is the global average pooling layer. It works very differently: all it does is compute the mean of each entire feature map (it’s like an average pooling layer using a pooling kernel with the same spatial dimensions as the inputs). This means that it just outputs a single number per feature map and per instance. Although this is of course extremely destructive (most of the information in the feature map is lost), it can be useful just before the output layer. To create such a layer, simply use the tf.keras.layers.GlobalAvgPool2D class:

global_avg_pool = tf.keras.layers.GlobalAvgPool2D()

TensorShape([2, 70, 120, 3])

# It is the same as using the low-level API to perform the reduction
global_avg_pool = tf.keras.layers.Lambda(lambda X: tf.reduce_mean(X, axis=[1, 2]))
global_avg_pool(images)

<tf.Tensor: shape=(2, 3), dtype=float32, numpy=
array([[0.643388  , 0.59718215, 0.5825038 ],
       [0.7630747 , 0.2601088 , 0.10848834]], dtype=float32)>

Now you know all the building blocks to create a convolutional neural network. Let’s see how to assemble them.

Tackling Fashion MNIST With a CNN

Before delving into the code, you can go through https://poloclub.github.io/cnn-explainer/ to make sure you understand every piece of a CNN.

Typical CNN architectures stack a few convolutional layers (each one generally followed by a ReLU layer), then a pooling layer, then another few convolutional layers (+ReLU), then another pooling layer, and so on. The image gets smaller and smaller as it progresses through the network, but it also typically gets deeper and deeper (i.e., with more feature maps) thanks to the convolutional layers. At the top of the stack, a regular feedforward neural network is added, composed of a few fully connected layers (+ReLUs), and the final layer outputs the prediction (e.g., a softmax layer that outputs estimated class probabilities).

Here is how you can implement a simple CNN to tackle the Fashion MNIST dataset:

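mnist = tf.keras.datasets.fashion_mnist.load_data()
(X_train_full, y_train_full), (X_test, y_test) = mnist
X_train_full = np.expand_dims(X_train_full, axis=-1).astype(np.float32) / 255
X_test = np.expand_dims(X_test.astype(np.float32), axis=-1) / 255
X_train, X_valid = X_train_full[:-5000], X_train_full[-5000:]
y_train, y_valid = y_train_full[:-5000], y_train_full[-5000:]
X_train.shape, X_valid.shape, X_test.shape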

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-labels-idx1-ubyte.gz

29515/29515 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/train-images-idx3-ubyte.gz

26421880/26421880 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-labels-idx1-ubyte.gz

5148/5148 [==============================] - 0s 0us/step

Downloading data from https://storage.googleapis.com/tensorflow/tf-keras-datasets/t10k-images-idx3-ubyte.gz

4422102/4422102 [==============================] - 0s 0us/step

((55000, 28, 28, 1), (5000, 28, 28, 1), (10000, 28, 28, 1))

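tf.random.set_seed(42)
DefaultConv2D = partial(tf.keras.layers.Conv2D, kernel_size=3, padding="same",
                        activation="relu", kernel_initializer="he_normal")
model = tf.keras.Sequential([
    DefaultConv2D(filters=32, kernel_size=7, input_shape=[28, 28, 1]),
    tf.keras.layers.MaxPool2D(),
    DefaultConv2D(filters=64),
    DefaultConv2D(filters=64),
    tf.keras.layers.MaxPool2D(),
    DefaultConv2D(filters=128),
    DefaultConv2D(filters=128),
    tf.keras.layers.MaxPool2D(),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(units=64, activation="relu", kernel_initializer="he_normal"),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(units=32, activation="relu", kernel_initializer="he_normal"),
    tf.keras.layers.Dropout(0.5),
    tf.keras.layers.Dense(units=10, activation="softmax")
])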

In this code, we start by using the partial() function to define a thin wrapper around the Conv2D class, called DefaultConv2D: it simply avoids having to repeat the same hyperparameter values over and over again.

The first layer sets input_shape=[28, 28, 1], which means the images are 28 × 28 pixels, with a single color channel (i.e., grayscale).

Next, we have a max pooling layer, which divides each spatial dimension by a factor of two (MaxPool2D uses pool_size=2 by default).

Then we repeat the same structure twice: convolutional layers followed by a max pooling layer. For larger images, we could repeat this structure several times (the number of repetitions is a hyperparameter you can tune).

Note that the number of filters grows as we climb up the CNN towards the output layer (it is initially 32, then 64, then 128): it makes sense for it to grow, since the number of low-level features is often fairly low (e.g., small circles, horizontal lines), but there are many different ways to combine them into higher-level features. It is common practice to double the number of filters after each pooling layer: since a pooling layer divides each spatial dimension by a factor of 2, we can afford to double the number of feature maps in the next layer without fear of exploding the number of parameters, memory usage, or computational load.

Next is the fully connected network, composed of two hidden dense layers and a dense output layer. Note that we must flatten the inputs first, since a dense network expects a 1D array of features for each instance. We also add two dropout layers, with a dropout rate of 50% each, to reduce overfitting.

Model: "sequential_4"
_________________________________________________________________
 Layer (type)                     Output Shape          Param #
=================================================================
 conv2d_23 (Conv2D)               (None, 28, 28, 32)    1600
 max_pooling2d_13 (MaxPooling2D)  (None, 14, 14, 32)    0
 conv2d_24 (Conv2D)               (None, 14, 14, 64)    18496
 conv2d_25 (Conv2D)               (None, 14, 14, 64)    36928
 max_pooling2d_14 (MaxPooling2D)  (None, 7, 7, 64)      0
 conv2d_26 (Conv2D)               (None, 7, 7, 128)     73856
 conv2d_27 (Conv2D)               (None, 7, 7, 128)     147584
 max_pooling2d_15 (MaxPooling2D)  (None, 3, 3, 128)     0
 flatten_4 (Flatten)              (None, 1152)          0
 dense_10 (Dense)                 (None, 64)            73792
 dropout_6 (Dropout)              (None, 64)            0
 dense_11 (Dense)                 (None, 32)            2080
 dropout_7 (Dropout)              (None, 32)            0
 dense_12 (Dense)                 (None, 10)            330
=================================================================
Total params: 354,666
Trainable params: 354,666
Non-trainable params: 0
_________________________________________________________________
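The parameter counts in this summary can be reproduced by hand. A Conv2D layer has fh × fw × fn' × fn kernel weights plus fn biases, a Dense layer has inputs × units weights plus units biases, and pooling, flattening and dropout layers have no parameters. The sketch below recomputes each count for this architecture (the helper names are hypothetical and the layer list is transcribed from the model code above):

```python
def conv_params(kernel_size, in_channels, filters):
    return kernel_size * kernel_size * in_channels * filters + filters

def dense_params(n_inputs, units):
    return n_inputs * units + units

# Spatial size: 28 -> 14 -> 7 -> 3 after each 2x2 max pooling (floor division)
flat = 3 * 3 * 128   # 1152 inputs to the first Dense layer

params = [
    conv_params(7, 1, 32),      # conv2d_23
    conv_params(3, 32, 64),     # conv2d_24
    conv_params(3, 64, 64),     # conv2d_25
    conv_params(3, 64, 128),    # conv2d_26
    conv_params(3, 128, 128),   # conv2d_27
    dense_params(flat, 64),     # dense_10
    dense_params(64, 32),       # dense_11
    dense_params(32, 10),       # dense_12
]
print(params)        # [1600, 18496, 36928, 73856, 147584, 73792, 2080, 330]
print(sum(params))   # 354666
```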

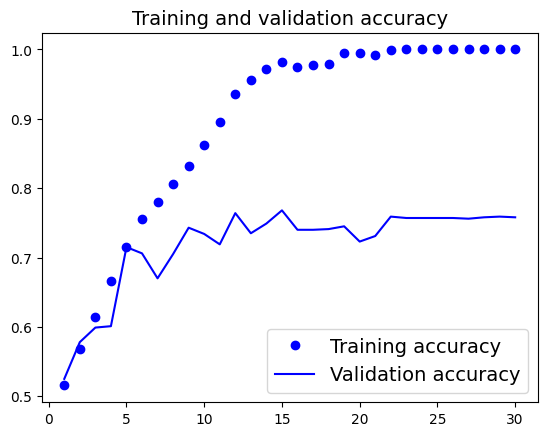

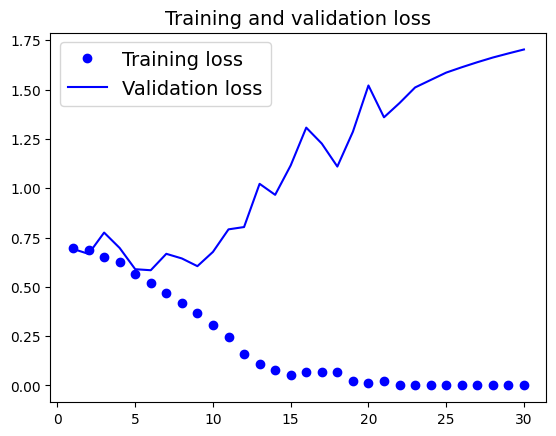

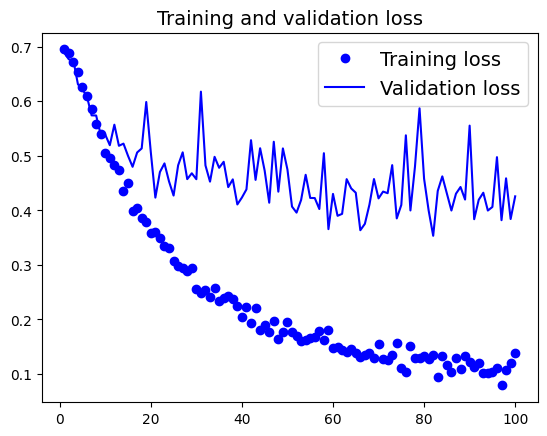

model.compile(loss="sparse_categorical_crossentropy", optimizer="nadam",
              metrics=["accuracy"])
history = model.fit(X_train, y_train, epochs=30, validation_data=(X_valid, y_valid))

Epoch 1/30

1719/1719 [==============================] - 18s 8ms/step - loss: 1.0470 - accuracy: 0.6161 - val_loss: 0.5400 - val_accuracy: 0.8344

Epoch 2/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.6715 - accuracy: 0.7635 - val_loss: 0.4153 - val_accuracy: 0.8658

Epoch 3/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.5936 - accuracy: 0.7930 - val_loss: 0.3833 - val_accuracy: 0.8806

Epoch 4/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.5398 - accuracy: 0.8111 - val_loss: 0.3692 - val_accuracy: 0.8764

Epoch 5/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.4998 - accuracy: 0.8251 - val_loss: 0.3740 - val_accuracy: 0.8748

Epoch 6/30

1719/1719 [==============================] - 17s 10ms/step - loss: 0.4701 - accuracy: 0.8372 - val_loss: 0.3260 - val_accuracy: 0.8892

Epoch 7/30

1719/1719 [==============================] - 16s 9ms/step - loss: 0.4427 - accuracy: 0.8451 - val_loss: 0.3160 - val_accuracy: 0.8908

Epoch 8/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.4219 - accuracy: 0.8521 - val_loss: 0.2874 - val_accuracy: 0.9014

Epoch 9/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3958 - accuracy: 0.8623 - val_loss: 0.2849 - val_accuracy: 0.9066

Epoch 10/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3818 - accuracy: 0.8667 - val_loss: 0.3108 - val_accuracy: 0.8972

Epoch 11/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3664 - accuracy: 0.8715 - val_loss: 0.2787 - val_accuracy: 0.9030

Epoch 12/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3619 - accuracy: 0.8742 - val_loss: 0.3005 - val_accuracy: 0.9004

Epoch 13/30

1719/1719 [==============================] - 12s 7ms/step - loss: 0.3500 - accuracy: 0.8773 - val_loss: 0.2992 - val_accuracy: 0.9088

Epoch 14/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3400 - accuracy: 0.8807 - val_loss: 0.2820 - val_accuracy: 0.9078

Epoch 15/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.3359 - accuracy: 0.8833 - val_loss: 0.3579 - val_accuracy: 0.9048

Epoch 16/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.3350 - accuracy: 0.8842 - val_loss: 0.2880 - val_accuracy: 0.9076

Epoch 17/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3248 - accuracy: 0.8901 - val_loss: 0.3157 - val_accuracy: 0.8962

Epoch 18/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3198 - accuracy: 0.8896 - val_loss: 0.3295 - val_accuracy: 0.9062

Epoch 19/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.3058 - accuracy: 0.8958 - val_loss: 0.3126 - val_accuracy: 0.9074

Epoch 20/30

1719/1719 [==============================] - 14s 8ms/step - loss: 0.3021 - accuracy: 0.8959 - val_loss: 0.3221 - val_accuracy: 0.8972

Epoch 21/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.2921 - accuracy: 0.8996 - val_loss: 0.3122 - val_accuracy: 0.9138

Epoch 22/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.2986 - accuracy: 0.8953 - val_loss: 0.2931 - val_accuracy: 0.9116

Epoch 23/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.2828 - accuracy: 0.9005 - val_loss: 0.3284 - val_accuracy: 0.9086

Epoch 24/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.2890 - accuracy: 0.9011 - val_loss: 0.3160 - val_accuracy: 0.9124

Epoch 25/30

1719/1719 [==============================] - 14s 8ms/step - loss: 0.2779 - accuracy: 0.9053 - val_loss: 0.3769 - val_accuracy: 0.8990

Epoch 26/30

1719/1719 [==============================] - 19s 11ms/step - loss: 0.2713 - accuracy: 0.9065 - val_loss: 0.3671 - val_accuracy: 0.8980

Epoch 27/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.2864 - accuracy: 0.9028 - val_loss: 0.3430 - val_accuracy: 0.9148

Epoch 28/30

1719/1719 [==============================] - 13s 8ms/step - loss: 0.2609 - accuracy: 0.9117 - val_loss: 0.3525 - val_accuracy: 0.9114

Epoch 29/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.2644 - accuracy: 0.9112 - val_loss: 0.3372 - val_accuracy: 0.9114

Epoch 30/30

1719/1719 [==============================] - 13s 7ms/step - loss: 0.2640 - accuracy: 0.9110 - val_loss: 0.3817 - val_accuracy: 0.9106

score = model.evaluate(X_test, y_test)
X_new = X_test[:10]  # pretend we have new images
y_proba = model.predict(X_new)
score, y_proba

313/313 [==============================] - 1s 4ms/step - loss: 0.3518 - accuracy: 0.9087

1/1 [==============================] - 0s 270ms/step

([0.35184580087661743, 0.9086999893188477],

array([[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 6.11583033e-34, 0.00000000e+00, 2.93755923e-16,

0.00000000e+00, 1.00000000e+00],

[2.29682566e-08, 0.00000000e+00, 9.95057583e-01, 1.22728333e-17,

1.12017071e-04, 0.00000000e+00, 4.83040046e-03, 0.00000000e+00,

2.82865502e-11, 0.00000000e+00],

[0.00000000e+00, 1.00000000e+00, 0.00000000e+00, 1.07169106e-28,

3.38975873e-33, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00],

[0.00000000e+00, 1.00000000e+00, 0.00000000e+00, 5.98663190e-28,

1.62537842e-30, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00],

[2.34796666e-02, 0.00000000e+00, 4.40896001e-05, 1.58730646e-08,

1.96278652e-05, 6.24068337e-15, 9.76455688e-01, 1.57429494e-17,

7.90122328e-07, 2.14440993e-14],

[0.00000000e+00, 1.00000000e+00, 0.00000000e+00, 1.17844744e-34,

1.37916159e-38, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00],

[1.58039376e-15, 0.00000000e+00, 1.19538361e-03, 3.84299897e-10,

9.98477161e-01, 0.00000000e+00, 3.27471091e-04, 0.00000000e+00,

9.29603522e-18, 0.00000000e+00],

[9.78829462e-07, 0.00000000e+00, 3.22155256e-06, 1.53601600e-12,

8.27561307e-05, 1.05881361e-37, 9.99913096e-01, 0.00000000e+00,

1.61168967e-10, 9.95916054e-31],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 1.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 0.00000000e+00],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, 0.00000000e+00,

0.00000000e+00, 2.82005428e-27, 0.00000000e+00, 1.00000000e+00,

0.00000000e+00, 1.44178385e-11]], dtype=float32))
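Each row of the `predict` output is a probability distribution over the 10 classes. Below is a minimal NumPy sketch that turns such rows into class predictions. It uses the first three rows of the output above, rounded. The name `y_proba` is only illustrative.

```python
import numpy as np

# First three rows of the model.predict output above, rounded.
# Each row is one image; each of the 10 columns is one class probability.
y_proba = np.array([
    [0.0, 0.0, 0.0, 0.0, 0.0, 6.1e-34, 0.0, 2.9e-16, 0.0, 1.0],
    [2.3e-08, 0.0, 0.995, 1.2e-17, 1.1e-04, 0.0, 4.8e-03, 0.0, 2.8e-11, 0.0],
    [0.0, 1.0, 0.0, 1.1e-28, 3.4e-33, 0.0, 0.0, 0.0, 0.0, 0.0],
])

# Predicted class index = column with the highest probability.
y_pred_classes = np.argmax(y_proba, axis=1)
# The winning probability can serve as a rough confidence score.
confidence = y_proba.max(axis=1)

print(y_pred_classes)   # [9 2 1]
print(confidence)
```

You can do the same on the full array returned by `model.predict(X_new)`: calling `.argmax(axis=1)` gives one class index per image. Assuming `y_test` holds integer labels, these indices can be compared directly with `y_test[:10]`.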

This CNN reaches over 90% accuracy on the test set (about 90.9%). It’s not state of the art, but it is pretty good, and better than the dense network with a similar number of parameters!

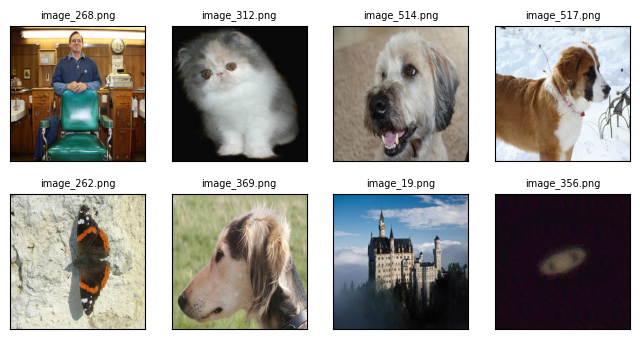
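The parameter comparison can be made concrete with the standard counting formulas for a `Conv2D` layer and a `Dense` layer. These match what Keras reports in `model.summary()` when both layers use the default `use_bias=True`. The layer sizes below are hypothetical and are not the model trained above.

```python
def conv2d_params(kernel_h: int, kernel_w: int, in_channels: int, filters: int) -> int:
    # Each filter has kernel_h * kernel_w * in_channels weights plus one bias,
    # and the same filter is reused (shared) at every spatial position.
    return (kernel_h * kernel_w * in_channels + 1) * filters


def dense_params(in_features: int, units: int) -> int:
    # Every input is connected to every unit, plus one bias per unit.
    return (in_features + 1) * units


# Hypothetical layer sizes for a 28x28 single-channel input:
print(conv2d_params(3, 3, 1, 64))   # 640
print(dense_params(28 * 28, 64))    # 50240
```

The convolutional count does not depend on the image size, because the same filters are shared across all positions. This weight sharing is one reason a convnet can get more out of a given parameter budget.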

Training a convnet from scratch on a small dataset

Having to train an image-classification model using very little data is a common situation, which you’ll likely encounter in practice if you ever do computer vision in a professional context. A “few” samples can mean anywhere from a few hundred to a few tens of thousands of images. As a practical example, we’ll focus on classifying images as dogs or cats in a dataset containing 5,000 pictures of cats and dogs (2,500 cats, 2,500 dogs). We’ll use 2,000 pictures for training, 1,000 for validation, and 2,000 for testing.

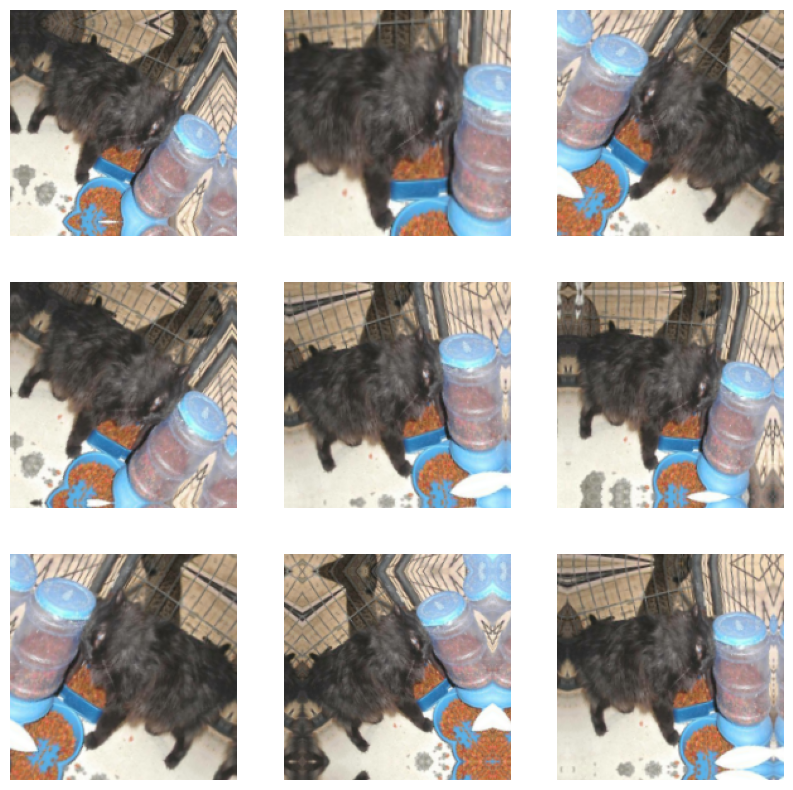

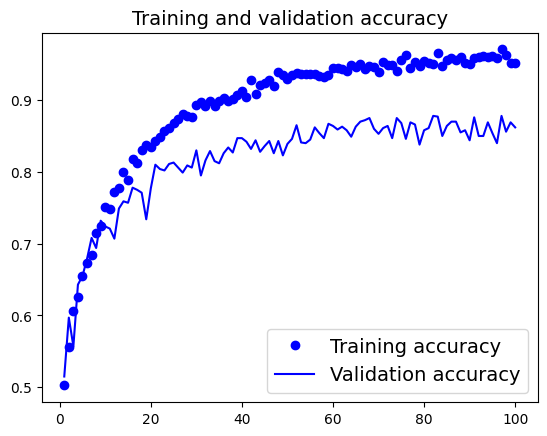

In this section, we’ll review one basic strategy to tackle this problem: training a new model from scratch using what little data you have. We’ll start by naively training a small convnet on the 2,000 training samples, without any regularization, to set a baseline for what can be achieved. This will get us to a classification accuracy of about 70%. At that point, the main issue will be overfitting. Then we’ll introduce data augmentation, a powerful technique for mitigating overfitting in computer vision. By using data augmentation, we’ll improve the model to reach an accuracy of 80–85%.
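Data augmentation is introduced properly later in this section. As a rough preview, here is a minimal NumPy sketch that assumes images are stored as `(height, width, channels)` arrays. It applies a random horizontal flip and a small random translation, producing new variants of an image that keep the same label.

The `augment` helper is hypothetical. Also note that `np.roll` wraps pixels around the edges, which is a simplification of what dedicated augmentation layers do.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator, max_shift: int = 4) -> np.ndarray:
    """Return a randomly flipped and translated copy of an (H, W, C) image."""
    out = image
    if rng.random() < 0.5:
        out = out[:, ::-1, :]  # horizontal flip: a cat is still a cat
    dy, dx = rng.integers(-max_shift, max_shift + 1, size=2)
    # Small translation; pixels pushed off one edge reappear on the other.
    out = np.roll(out, shift=(dy, dx), axis=(0, 1))
    return out


rng = np.random.default_rng(0)
image = np.arange(8 * 8 * 3, dtype=np.float32).reshape(8, 8, 3)
batch = np.stack([augment(image, rng) for _ in range(4)])
print(batch.shape)  # (4, 8, 8, 3)
```

Every variant contains exactly the same pixel values as the original, just rearranged. The model therefore sees "new" images without any new information being invented.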

The relevance of deep learning for small-data problems

What qualifies as “enough samples” to train a model is relative: relative to the size and depth of the model you’re trying to train, for starters. It isn’t possible to train a convnet to solve a complex problem with just a few tens of samples, but a few hundred can potentially suffice if the model is small and well regularized and the task is simple.

Because convnets learn local, translation-invariant features, they’re highly data-efficient on perceptual problems. Training a convnet from scratch on a very small image dataset will yield reasonable results despite a relative lack of data, without the need for any custom feature engineering. You’ll see this in action in this section.
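To see what "translation-invariant" means in practice, here is a toy NumPy sketch. It is not how Keras implements `Conv2D`. Strictly speaking, a convolution is translation-equivariant: moving a pattern moves its response in the feature map by the same amount. Taking a max over the map then makes the response invariant to where the pattern appears. The 2x2 edge kernel is a hypothetical example.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Naive 'valid' 2D cross-correlation (the operation a Conv2D layer applies)."""
    kh, kw = kernel.shape
    oh = image.shape[0] - kh + 1
    ow = image.shape[1] - kw + 1
    out = np.zeros((oh, ow))
    for r in range(oh):
        for c in range(ow):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out


# Hypothetical vertical-edge detector.
kernel = np.array([[1.0, -1.0],
                   [1.0, -1.0]])

image = np.zeros((8, 8))
image[2:5, 2] = 1.0                          # a short vertical stroke
shifted = np.roll(image, shift=3, axis=1)    # same stroke, 3 pixels to the right

fmap = conv2d_valid(image, kernel)
fmap_shifted = conv2d_valid(shifted, kernel)

# Equivariance: the strongest response moves with the pattern...
print(np.argwhere(fmap == fmap.max())[0], np.argwhere(fmap_shifted == fmap_shifted.max())[0])  # [2 2] [2 5]
# ...and a global max over the map does not change (invariance).
print(fmap.max(), fmap_shifted.max())  # 2.0 2.0
```

The same kernel detects the stroke wherever it appears. This is why a pattern learned in one part of an image does not need to be relearned elsewhere.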

Downloading the data

The Dogs vs. Cats dataset that we will use isn’t packaged with Keras. It was made available by Kaggle as part of a computer vision competition in late 2013, back when convnets weren’t mainstream. You can download the original dataset from www.kaggle.com/c/dogs-vs-cats/data.

You can also download it with the Kaggle API. First, you need to create a Kaggle API key and download it to your local machine: navigate to the Kaggle website in a web browser, log in, and go to the My Account page. In your account settings, you’ll find an API section. Clicking the Create New API Token button generates a kaggle.json key file and downloads it to your machine.

# Upload the API’s key JSON file to your Colab
# session by running the following code in a notebook cell:
from google.colab import files
files.upload()

Finally, create a ~/.kaggle folder, and copy the key file to it. As a security best practice, you should also make sure that the file is only readable by the current user, yourself:

!mkdir ~/.kaggle
!cp kaggle.json ~/.kaggle/
!chmod 600 ~/.kaggle/kaggle.json
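If you are not working in a notebook, you can reproduce the three shell commands above in plain Python using only the standard library. `install_kaggle_key` and `is_owner_only` are hypothetical helper names. The sketch assumes the Kaggle CLI looks for the key at `~/.kaggle/kaggle.json`, as described above.

```python
import os
import shutil
import stat
from pathlib import Path
from typing import Optional


def install_kaggle_key(key_file: Path, home: Optional[Path] = None) -> Path:
    """Copy kaggle.json into <home>/.kaggle/ and make it owner-only."""
    home = home if home is not None else Path.home()
    kaggle_dir = home / ".kaggle"
    kaggle_dir.mkdir(parents=True, exist_ok=True)   # like `mkdir ~/.kaggle`
    target = kaggle_dir / "kaggle.json"
    shutil.copyfile(key_file, target)               # like `cp kaggle.json ~/.kaggle/`
    os.chmod(target, stat.S_IRUSR | stat.S_IWUSR)   # like `chmod 600`
    return target


def is_owner_only(path: Path) -> bool:
    """True if neither the group nor other users have any permission bits."""
    mode = stat.S_IMODE(path.stat().st_mode)
    return (mode & (stat.S_IRWXG | stat.S_IRWXO)) == 0
```

For example, `install_kaggle_key(Path("kaggle.json"))` installs the key for the current user. Afterwards, `is_owner_only` on the returned path is a quick way to confirm that the permissions were applied.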

# You can now download the data we’re about to use:
!kaggle competitions download -c dogs-vs-cats

Downloading dogs-vs-cats.zip to /content

98% 797M/812M [00:04<00:00, 251MB/s]

100% 812M/812M [00:04<00:00, 191MB/s]

The first time you try to download the data, you may get a “403 Forbidden” error. That’s because you need to accept the terms associated with the dataset before you download it—you’ll have to go to www.kaggle.com/c/dogs-vs-cats/rules (while logged into your Kaggle account) and click the I Understand and Accept button. You only need to do this once.

!unzip -qq dogs-vs-cats.zip
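You can do the same extraction with Python's standard `zipfile` module, then run a quick check of how many images each class has. This sketch assumes files are named `<label>.<index>.jpg`, as in the directory listing further down. `extract` and `count_by_class` are hypothetical helpers.

```python
import zipfile
from collections import Counter
from pathlib import Path


def extract(zip_path: Path, dest: Path) -> None:
    """Python equivalent of `unzip -qq <zip_path> -d <dest>`."""
    with zipfile.ZipFile(zip_path) as zf:
        zf.extractall(dest)


def count_by_class(image_dir: Path) -> Counter:
    """Count files named '<label>.<index>.jpg' by their label prefix."""
    counts = Counter()
    for path in image_dir.glob("*.jpg"):
        label = path.name.split(".", 1)[0]   # 'dog.5502.jpg' -> 'dog'
        counts[label] += 1
    return counts
```

The downloaded archive may itself contain nested archives, for example one holding the training images. Extract those the same way. If the data matches the description below, `count_by_class` on the unpacked training folder should then report 12,500 images per class.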

The pictures in our dataset are medium-resolution color JPEGs. Unsurprisingly, the original dogs-versus-cats Kaggle competition, all the way back in 2013, was won by entrants who used convnets. The best entries achieved up to 95% accuracy. Even though we will train our models on less than 10% of the data that was available to the competitors, we will still get reasonably good performance.

This dataset contains 25,000 images of dogs and cats (12,500 from each class) and is 543 MB (compressed). After downloading and uncompressing the data, we’ll create a new dataset containing three subsets: a training set with 1,000 samples of each class, a validation set with 500 samples of each class, and a test set with 1,000 samples of each class. Why do this? Because many of the image datasets you’ll encounter in your career only contain a few thousand samples, not tens of thousands. Having more data available would make the problem easier, so it’s good practice to learn with a small dataset.
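One way to build these three subsets is sketched below with `pathlib` and `shutil`. It makes a few assumptions:

- The original images sit in a single folder as `cat.<i>.jpg` and `dog.<i>.jpg`, matching the listing below.
- Images 0 to 999 go to training, 1000 to 1499 to validation, and 1500 to 2499 to testing.
- The helper names and the output folder name are hypothetical.

```python
import shutil
from pathlib import Path


def make_subset(original_dir: Path, new_base_dir: Path,
                subset_name: str, start_index: int, end_index: int) -> None:
    """Copy cat.<i>.jpg and dog.<i>.jpg for start_index <= i < end_index
    into new_base_dir/subset_name/cat/ and new_base_dir/subset_name/dog/."""
    for category in ("cat", "dog"):
        target_dir = new_base_dir / subset_name / category
        target_dir.mkdir(parents=True, exist_ok=True)
        for i in range(start_index, end_index):
            fname = f"{category}.{i}.jpg"
            shutil.copyfile(original_dir / fname, target_dir / fname)


def make_small_dataset(original_dir: Path, new_base_dir: Path) -> None:
    # Per class: 1,000 training, 500 validation and 1,000 test images,
    # taken from disjoint index ranges so no image lands in two subsets.
    make_subset(original_dir, new_base_dir, "train", 0, 1000)
    make_subset(original_dir, new_base_dir, "validation", 1000, 1500)
    make_subset(original_dir, new_base_dir, "test", 1500, 2500)
```

For example, `make_small_dataset(Path("train"), Path("cats_vs_dogs_small"))` would produce `train/`, `validation/` and `test/` subfolders, each containing `cat/` and `dog/`. Directory-based loaders such as Keras's `image_dataset_from_directory` can read this one-folder-per-class layout directly.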

Streaming output truncated to the last 5000 lines.

├── dog.5502.jpg

├── dog.5503.jpg

├── dog.5504.jpg

├── dog.5505.jpg

├── dog.5506.jpg

├── dog.5507.jpg

├── dog.5508.jpg

├── dog.5509.jpg

├── dog.550.jpg

├── dog.5510.jpg

├── dog.5511.jpg

├── dog.5512.jpg

├── dog.5513.jpg

├── dog.5514.jpg

├── dog.5515.jpg

├── dog.5516.jpg

├── dog.5517.jpg

├── dog.5518.jpg

├── dog.5519.jpg

├── dog.551.jpg

├── dog.5520.jpg

├── dog.5521.jpg

├── dog.5522.jpg

├── dog.5523.jpg

├── dog.5524.jpg

├── dog.5525.jpg

├── dog.5526.jpg

├── dog.5527.jpg

├── dog.5528.jpg

├── dog.5529.jpg

├── dog.552.jpg

├── dog.5530.jpg

├── dog.5531.jpg

├── dog.5532.jpg

├── dog.5533.jpg

├── dog.5534.jpg

├── dog.5535.jpg

├── dog.5536.jpg

├── dog.5537.jpg

├── dog.5538.jpg

├── dog.5539.jpg

├── dog.553.jpg

├── dog.5540.jpg

├── dog.5541.jpg

├── dog.5542.jpg

├── dog.5543.jpg

├── dog.5544.jpg

├── dog.5545.jpg

├── dog.5546.jpg

├── dog.5547.jpg

├── dog.5548.jpg

├── dog.5549.jpg

├── dog.554.jpg

├── dog.5550.jpg

├── dog.5551.jpg

├── dog.5552.jpg

├── dog.5553.jpg

├── dog.5554.jpg

├── dog.5555.jpg

├── dog.5556.jpg

├── dog.5557.jpg

├── dog.5558.jpg

├── dog.5559.jpg

├── dog.555.jpg

├── dog.5560.jpg

├── dog.5561.jpg

├── dog.5562.jpg

├── dog.5563.jpg

├── dog.5564.jpg

├── dog.5565.jpg

├── dog.5566.jpg

├── dog.5567.jpg

├── dog.5568.jpg

├── dog.5569.jpg

├── dog.556.jpg

├── dog.5570.jpg

├── dog.5571.jpg

├── dog.5572.jpg

├── dog.5573.jpg

├── dog.5574.jpg

├── dog.5575.jpg

├── dog.5576.jpg

├── dog.5577.jpg

├── dog.5578.jpg

├── dog.5579.jpg

├── dog.557.jpg

├── dog.5580.jpg

├── dog.5581.jpg

├── dog.5582.jpg

├── dog.5583.jpg

├── dog.5584.jpg

├── dog.5585.jpg

├── dog.5586.jpg

├── dog.5587.jpg

├── dog.5588.jpg

├── dog.5589.jpg

├── dog.558.jpg

├── dog.5590.jpg

├── dog.5591.jpg

├── dog.5592.jpg

├── dog.5593.jpg

├── dog.5594.jpg

├── dog.5595.jpg

├── dog.5596.jpg

├── dog.5597.jpg

├── dog.5598.jpg

├── dog.5599.jpg

├── dog.559.jpg

├── dog.55.jpg

├── dog.5600.jpg

├── dog.5601.jpg

├── dog.5602.jpg

├── dog.5603.jpg

├── dog.5604.jpg

├── dog.5605.jpg

├── dog.5606.jpg

├── dog.5607.jpg

├── dog.5608.jpg

├── dog.5609.jpg

├── dog.560.jpg

├── dog.5610.jpg

├── dog.5611.jpg

├── dog.5612.jpg

├── dog.5613.jpg

├── dog.5614.jpg

├── dog.5615.jpg

├── dog.5616.jpg

├── dog.5617.jpg

├── dog.5618.jpg

├── dog.5619.jpg

├── dog.561.jpg

├── dog.5620.jpg

├── dog.5621.jpg

├── dog.5622.jpg

├── dog.5623.jpg

├── dog.5624.jpg

├── dog.5625.jpg

├── dog.5626.jpg

├── dog.5627.jpg

├── dog.5628.jpg

├── dog.5629.jpg

├── dog.562.jpg

├── dog.5630.jpg

├── dog.5631.jpg

├── dog.5632.jpg

├── dog.5633.jpg

├── dog.5634.jpg

├── dog.5635.jpg

├── dog.5636.jpg

├── dog.5637.jpg

├── dog.5638.jpg

├── dog.5639.jpg

├── dog.563.jpg

├── dog.5640.jpg

├── dog.5641.jpg

├── dog.5642.jpg

├── dog.5643.jpg

├── dog.5644.jpg

├── dog.5645.jpg

├── dog.5646.jpg

├── dog.5647.jpg

├── dog.5648.jpg

├── dog.5649.jpg

├── dog.564.jpg

├── dog.5650.jpg

├── dog.5651.jpg

├── dog.5652.jpg

├── dog.5653.jpg

├── dog.5654.jpg

├── dog.5655.jpg

├── dog.5656.jpg

├── dog.5657.jpg

├── dog.5658.jpg

├── dog.5659.jpg

├── dog.565.jpg

├── dog.5660.jpg

├── dog.5661.jpg

├── dog.5662.jpg

├── dog.5663.jpg

├── dog.5664.jpg

├── dog.5665.jpg

├── dog.5666.jpg

├── dog.5667.jpg

├── dog.5668.jpg

├── dog.5669.jpg

├── dog.566.jpg

├── dog.5670.jpg

├── dog.5671.jpg

├── dog.5672.jpg

├── dog.5673.jpg

├── dog.5674.jpg

├── dog.5675.jpg

├── dog.5676.jpg

├── dog.5677.jpg

├── dog.5678.jpg

├── dog.5679.jpg

├── dog.567.jpg

├── dog.5680.jpg

├── dog.5681.jpg

├── dog.5682.jpg

├── dog.5683.jpg

├── dog.5684.jpg

├── dog.5685.jpg

├── dog.5686.jpg

├── dog.5687.jpg

├── dog.5688.jpg

├── dog.5689.jpg

├── dog.568.jpg

├── dog.5690.jpg

├── dog.5691.jpg

├── dog.5692.jpg

├── dog.5693.jpg

├── dog.5694.jpg

├── dog.5695.jpg

├── dog.5696.jpg

├── dog.5697.jpg

├── dog.5698.jpg

├── dog.5699.jpg

├── dog.569.jpg

├── dog.56.jpg

├── dog.5700.jpg

├── dog.5701.jpg

├── dog.5702.jpg

├── dog.5703.jpg

├── dog.5704.jpg

├── dog.5705.jpg

├── dog.5706.jpg

├── dog.5707.jpg

├── dog.5708.jpg

├── dog.5709.jpg

├── dog.570.jpg

├── dog.5710.jpg

├── dog.5711.jpg

├── dog.5712.jpg

├── dog.5713.jpg

├── dog.5714.jpg

├── dog.5715.jpg

├── dog.5716.jpg

├── dog.5717.jpg

├── dog.5718.jpg

├── dog.5719.jpg

├── dog.571.jpg

├── dog.5720.jpg

├── dog.5721.jpg

├── dog.5722.jpg

├── dog.5723.jpg

├── dog.5724.jpg

├── dog.5725.jpg

├── dog.5726.jpg

├── dog.5727.jpg

├── dog.5728.jpg

├── dog.5729.jpg

├── dog.572.jpg

├── dog.5730.jpg

├── dog.5731.jpg

├── dog.5732.jpg

├── dog.5733.jpg

├── dog.5734.jpg

├── dog.5735.jpg

├── dog.5736.jpg

├── dog.5737.jpg

├── dog.5738.jpg

├── dog.5739.jpg

├── dog.573.jpg

├── dog.5740.jpg

├── dog.5741.jpg

├── dog.5742.jpg

├── dog.5743.jpg

├── dog.5744.jpg

├── dog.5745.jpg

├── dog.5746.jpg

├── dog.5747.jpg

├── dog.5748.jpg

├── dog.5749.jpg

├── dog.574.jpg

├── dog.5750.jpg

├── dog.5751.jpg

├── dog.5752.jpg

├── dog.5753.jpg

├── dog.5754.jpg

├── dog.5755.jpg

├── dog.5756.jpg

├── dog.5757.jpg

├── dog.5758.jpg

├── dog.5759.jpg

├── dog.575.jpg

├── dog.5760.jpg

├── dog.5761.jpg

├── dog.5762.jpg

├── dog.5763.jpg

├── dog.5764.jpg

├── dog.5765.jpg

├── dog.5766.jpg

├── dog.5767.jpg

├── dog.5768.jpg

├── dog.5769.jpg

├── dog.576.jpg

├── dog.5770.jpg

├── dog.5771.jpg

├── dog.5772.jpg

├── dog.5773.jpg

├── dog.5774.jpg

├── dog.5775.jpg

├── dog.5776.jpg

├── dog.5777.jpg

├── dog.5778.jpg

├── dog.5779.jpg

├── dog.577.jpg

├── dog.5780.jpg

├── dog.5781.jpg

├── dog.5782.jpg

├── dog.5783.jpg

├── dog.5784.jpg

├── dog.5785.jpg

├── dog.5786.jpg

├── dog.5787.jpg

├── dog.5788.jpg

├── dog.5789.jpg

├── dog.578.jpg

├── dog.5790.jpg

├── dog.5791.jpg

├── dog.5792.jpg

├── dog.5793.jpg

├── dog.5794.jpg

├── dog.5795.jpg

├── dog.5796.jpg

├── dog.5797.jpg

├── dog.5798.jpg

├── dog.5799.jpg

├── dog.579.jpg

├── dog.57.jpg

├── dog.5800.jpg

├── dog.5801.jpg

├── dog.5802.jpg

├── dog.5803.jpg

├── dog.5804.jpg

├── dog.5805.jpg

├── dog.5806.jpg

├── dog.5807.jpg

├── dog.5808.jpg

├── dog.5809.jpg

├── dog.580.jpg

├── dog.5810.jpg

├── dog.5811.jpg

├── dog.5812.jpg

├── dog.5813.jpg

├── dog.5814.jpg

├── dog.5815.jpg

├── dog.5816.jpg

├── dog.5817.jpg

├── dog.5818.jpg

├── dog.5819.jpg

├── dog.581.jpg

├── dog.5820.jpg

├── dog.5821.jpg

├── dog.5822.jpg

├── dog.5823.jpg

├── dog.5824.jpg

├── dog.5825.jpg

├── dog.5826.jpg

├── dog.5827.jpg

├── dog.5828.jpg

├── dog.5829.jpg

├── dog.582.jpg

├── dog.5830.jpg

├── dog.5831.jpg

├── dog.5832.jpg

├── dog.5833.jpg

├── dog.5834.jpg

├── dog.5835.jpg

├── dog.5836.jpg

├── dog.5837.jpg

├── dog.5838.jpg

├── dog.5839.jpg

├── dog.583.jpg

├── dog.5840.jpg

├── dog.5841.jpg

├── dog.5842.jpg

├── dog.5843.jpg

├── dog.5844.jpg

├── dog.5845.jpg

├── dog.5846.jpg

├── dog.5847.jpg

├── dog.5848.jpg

├── dog.5849.jpg

├── dog.584.jpg

├── dog.5850.jpg

├── dog.5851.jpg

├── dog.5852.jpg

├── dog.5853.jpg

├── dog.5854.jpg

├── dog.5855.jpg

├── dog.5856.jpg

├── dog.5857.jpg

├── dog.5858.jpg

├── dog.5859.jpg

├── dog.585.jpg

├── dog.5860.jpg

├── dog.5861.jpg

├── dog.5862.jpg

├── dog.5863.jpg

├── dog.5864.jpg

├── dog.5865.jpg

├── dog.5866.jpg

├── dog.5867.jpg

├── dog.5868.jpg

├── dog.5869.jpg

├── dog.586.jpg

├── dog.5870.jpg

├── dog.5871.jpg

├── dog.5872.jpg

├── dog.5873.jpg

├── dog.5874.jpg

├── dog.5875.jpg

├── dog.5876.jpg

├── dog.5877.jpg

├── dog.5878.jpg

├── dog.5879.jpg

├── dog.587.jpg

├── dog.5880.jpg

├── dog.5881.jpg

├── dog.5882.jpg

├── dog.5883.jpg

├── dog.5884.jpg

├── dog.5885.jpg

├── dog.5886.jpg

├── dog.5887.jpg

├── dog.5888.jpg

├── dog.5889.jpg

├── dog.588.jpg

├── dog.5890.jpg

├── dog.5891.jpg

├── dog.5892.jpg

├── dog.5893.jpg

├── dog.5894.jpg

├── dog.5895.jpg

├── dog.5896.jpg

├── dog.5897.jpg

├── dog.5898.jpg

├── dog.5899.jpg

├── dog.589.jpg

├── dog.58.jpg

├── dog.5900.jpg

├── dog.5901.jpg

├── dog.5902.jpg

├── dog.5903.jpg

├── dog.5904.jpg

├── dog.5905.jpg

├── dog.5906.jpg

├── dog.5907.jpg

├── dog.5908.jpg

├── dog.5909.jpg

├── dog.590.jpg

├── dog.5910.jpg

├── dog.5911.jpg

├── dog.5912.jpg

├── dog.5913.jpg

├── dog.5914.jpg

├── dog.5915.jpg

├── dog.5916.jpg

├── dog.5917.jpg

├── dog.5918.jpg

├── dog.5919.jpg

├── dog.591.jpg

├── dog.5920.jpg

├── dog.5921.jpg

├── dog.5922.jpg

├── dog.5923.jpg

├── dog.5924.jpg

├── dog.5925.jpg

├── dog.5926.jpg

├── dog.5927.jpg

├── dog.5928.jpg

├── dog.5929.jpg

├── dog.592.jpg

├── dog.5930.jpg

├── dog.5931.jpg

├── dog.5932.jpg

├── dog.5933.jpg

├── dog.5934.jpg

├── dog.5935.jpg

├── dog.5936.jpg

├── dog.5937.jpg

├── dog.5938.jpg

├── dog.5939.jpg

├── dog.593.jpg

├── dog.5940.jpg

├── dog.5941.jpg

├── dog.5942.jpg

├── dog.5943.jpg

├── dog.5944.jpg

├── dog.5945.jpg

├── dog.5946.jpg

├── dog.5947.jpg

├── dog.5948.jpg

├── dog.5949.jpg

├── dog.594.jpg

├── dog.5950.jpg

├── dog.5951.jpg

├── dog.5952.jpg

├── dog.5953.jpg

├── dog.5954.jpg

├── dog.5955.jpg

├── dog.5956.jpg

├── dog.5957.jpg

├── dog.5958.jpg

├── dog.5959.jpg

├── dog.595.jpg

├── dog.5960.jpg

├── dog.5961.jpg

├── dog.5962.jpg

├── dog.5963.jpg

├── dog.5964.jpg

├── dog.5965.jpg

├── dog.5966.jpg

├── dog.5967.jpg

├── dog.5968.jpg

├── dog.5969.jpg

├── dog.596.jpg

├── dog.5970.jpg

├── dog.5971.jpg

├── dog.5972.jpg

├── dog.5973.jpg

├── dog.5974.jpg

├── dog.5975.jpg

├── dog.5976.jpg

├── dog.5977.jpg

├── dog.5978.jpg

├── dog.5979.jpg

├── dog.597.jpg

├── dog.5980.jpg

├── dog.5981.jpg

├── dog.5982.jpg

├── dog.5983.jpg

├── dog.5984.jpg

├── dog.5985.jpg

├── dog.5986.jpg

├── dog.5987.jpg

├── dog.5988.jpg

├── dog.5989.jpg

├── dog.598.jpg

├── dog.5990.jpg

├── dog.5991.jpg

├── dog.5992.jpg

├── dog.5993.jpg

├── dog.5994.jpg

├── dog.5995.jpg

├── dog.5996.jpg

├── dog.5997.jpg

├── dog.5998.jpg

├── dog.5999.jpg

├── dog.599.jpg

├── dog.59.jpg

├── dog.5.jpg

├── dog.6000.jpg

├── dog.6001.jpg

├── dog.6002.jpg

├── dog.6003.jpg

├── dog.6004.jpg

├── dog.6005.jpg

├── dog.6006.jpg

├── dog.6007.jpg

├── dog.6008.jpg

├── dog.6009.jpg

├── dog.600.jpg

├── dog.6010.jpg

├── dog.6011.jpg

├── dog.6012.jpg

├── dog.6013.jpg

├── dog.6014.jpg

├── dog.6015.jpg

├── dog.6016.jpg

├── dog.6017.jpg

├── dog.6018.jpg

├── dog.6019.jpg

├── dog.601.jpg

├── dog.6020.jpg

├── dog.6021.jpg

├── dog.6022.jpg

├── dog.6023.jpg

├── dog.6024.jpg

├── dog.6025.jpg

├── dog.6026.jpg

├── dog.6027.jpg

├── dog.6028.jpg

├── dog.6029.jpg

├── dog.602.jpg

├── dog.6030.jpg

├── dog.6031.jpg

├── dog.6032.jpg

├── dog.6033.jpg

├── dog.6034.jpg

├── dog.6035.jpg

├── dog.6036.jpg

├── dog.6037.jpg

├── dog.6038.jpg

├── dog.6039.jpg

├── dog.603.jpg

├── dog.6040.jpg

├── dog.6041.jpg

├── dog.6042.jpg

├── dog.6043.jpg

├── dog.6044.jpg

├── dog.6045.jpg

├── dog.6046.jpg

├── dog.6047.jpg

├── dog.6048.jpg

├── dog.6049.jpg

├── dog.604.jpg

├── dog.6050.jpg

├── dog.6051.jpg

├── dog.6052.jpg

├── dog.6053.jpg

├── dog.6054.jpg

├── dog.6055.jpg

├── dog.6056.jpg

├── dog.6057.jpg

├── dog.6058.jpg

├── dog.6059.jpg

├── dog.605.jpg

├── dog.6060.jpg

├── dog.6061.jpg

├── dog.6062.jpg

├── dog.6063.jpg

├── dog.6064.jpg

├── dog.6065.jpg

├── dog.6066.jpg

├── dog.6067.jpg

├── dog.6068.jpg

├── dog.6069.jpg

├── dog.606.jpg

├── dog.6070.jpg

├── dog.6071.jpg

├── dog.6072.jpg

├── dog.6073.jpg

├── dog.6074.jpg

├── dog.6075.jpg

├── dog.6076.jpg

├── dog.6077.jpg

├── dog.6078.jpg

├── dog.6079.jpg

├── dog.607.jpg

├── dog.6080.jpg

├── dog.6081.jpg

├── dog.6082.jpg

├── dog.6083.jpg

├── dog.6084.jpg

├── dog.6085.jpg

├── dog.6086.jpg

├── dog.6087.jpg

├── dog.6088.jpg

├── dog.6089.jpg

├── dog.608.jpg

├── dog.6090.jpg

├── dog.6091.jpg

├── dog.6092.jpg

├── dog.6093.jpg

├── dog.6094.jpg

├── dog.6095.jpg

├── dog.6096.jpg

├── dog.6097.jpg

├── dog.6098.jpg

├── dog.6099.jpg

├── dog.609.jpg

├── dog.60.jpg

├── dog.6100.jpg

├── dog.6101.jpg

├── dog.6102.jpg

├── dog.6103.jpg

├── dog.6104.jpg

├── dog.6105.jpg

├── dog.6106.jpg

├── dog.6107.jpg

├── dog.6108.jpg

├── dog.6109.jpg

├── dog.610.jpg

├── dog.6110.jpg

├── dog.6111.jpg

├── dog.6112.jpg

├── dog.6113.jpg

├── dog.6114.jpg

├── dog.6115.jpg

├── dog.6116.jpg

├── dog.6117.jpg

├── dog.6118.jpg

├── dog.6119.jpg

├── dog.611.jpg

├── dog.6120.jpg

├── dog.6121.jpg

├── dog.6122.jpg

├── dog.6123.jpg

├── dog.6124.jpg

├── dog.6125.jpg

├── dog.6126.jpg

├── dog.6127.jpg

├── dog.6128.jpg

├── dog.6129.jpg

├── dog.612.jpg

├── dog.6130.jpg

├── dog.6131.jpg

├── dog.6132.jpg

├── dog.6133.jpg

├── dog.6134.jpg

├── dog.6135.jpg

├── dog.6136.jpg

├── dog.6137.jpg

├── dog.6138.jpg

├── dog.6139.jpg

├── dog.613.jpg

├── dog.6140.jpg

├── dog.6141.jpg

├── dog.6142.jpg

├── dog.6143.jpg

├── dog.6144.jpg

├── dog.6145.jpg

├── dog.6146.jpg

├── dog.6147.jpg

├── dog.6148.jpg

├── dog.6149.jpg

├── dog.614.jpg

├── dog.6150.jpg

├── dog.6151.jpg

├── dog.6152.jpg

├── dog.6153.jpg

├── dog.6154.jpg

├── dog.6155.jpg

├── dog.6156.jpg

├── dog.6157.jpg

├── dog.6158.jpg

├── dog.6159.jpg

├── dog.615.jpg

├── dog.6160.jpg

├── dog.6161.jpg

├── dog.6162.jpg

├── dog.6163.jpg

├── dog.6164.jpg

├── dog.6165.jpg

├── dog.6166.jpg

├── dog.6167.jpg

├── dog.6168.jpg

├── dog.6169.jpg

├── dog.616.jpg

├── dog.6170.jpg

├── dog.6171.jpg

├── dog.6172.jpg

├── dog.6173.jpg

├── dog.6174.jpg

├── dog.6175.jpg

├── dog.6176.jpg

├── dog.6177.jpg

├── dog.6178.jpg

├── dog.6179.jpg

├── dog.617.jpg

├── dog.6180.jpg

├── dog.6181.jpg

├── dog.6182.jpg

├── dog.6183.jpg

├── dog.6184.jpg

├── dog.6185.jpg

├── dog.6186.jpg

├── dog.6187.jpg

├── dog.6188.jpg

├── dog.6189.jpg

├── dog.618.jpg

├── dog.6190.jpg

├── dog.6191.jpg

├── dog.6192.jpg

├── dog.6193.jpg

├── dog.6194.jpg

├── dog.6195.jpg

├── dog.6196.jpg

├── dog.6197.jpg

├── dog.6198.jpg

├── dog.6199.jpg

├── dog.619.jpg

├── dog.61.jpg

├── dog.6200.jpg

├── dog.6201.jpg

├── dog.6202.jpg

├── dog.6203.jpg

├── dog.6204.jpg

├── dog.6205.jpg

├── dog.6206.jpg

├── dog.6207.jpg

├── dog.6208.jpg

├── dog.6209.jpg

├── dog.620.jpg

├── dog.6210.jpg

├── dog.6211.jpg

├── dog.6212.jpg

├── dog.6213.jpg

├── dog.6214.jpg

├── dog.6215.jpg

├── dog.6216.jpg

├── dog.6217.jpg

├── dog.6218.jpg

├── dog.6219.jpg

├── dog.621.jpg

├── dog.6220.jpg

├── dog.6221.jpg

├── dog.6222.jpg

├── dog.6223.jpg

├── dog.6224.jpg

├── dog.6225.jpg

├── dog.6226.jpg

├── dog.6227.jpg

├── dog.6228.jpg

├── dog.6229.jpg

├── dog.622.jpg

├── dog.6230.jpg

├── dog.6231.jpg

├── dog.6232.jpg

├── dog.6233.jpg

├── dog.6234.jpg

├── dog.6235.jpg

├── dog.6236.jpg

├── dog.6237.jpg

├── dog.6238.jpg

├── dog.6239.jpg

├── dog.623.jpg

├── dog.6240.jpg

├── dog.6241.jpg

├── dog.6242.jpg

├── dog.6243.jpg

├── dog.6244.jpg

├── dog.6245.jpg

├── dog.6246.jpg

├── dog.6247.jpg

├── dog.6248.jpg

├── dog.6249.jpg

├── dog.624.jpg

├── dog.6250.jpg

├── dog.6251.jpg

├── dog.6252.jpg

├── dog.6253.jpg

├── dog.6254.jpg

├── dog.6255.jpg

├── dog.6256.jpg

├── dog.6257.jpg

├── dog.6258.jpg

├── dog.6259.jpg

├── dog.625.jpg

├── dog.6260.jpg

├── dog.6261.jpg

├── dog.6262.jpg

├── dog.6263.jpg

├── dog.6264.jpg

├── dog.6265.jpg

├── dog.6266.jpg

├── dog.6267.jpg

├── dog.6268.jpg

├── dog.6269.jpg

├── dog.626.jpg

├── dog.6270.jpg

├── dog.6271.jpg

├── dog.6272.jpg

├── dog.6273.jpg

├── dog.6274.jpg

├── dog.6275.jpg

├── dog.6276.jpg

├── dog.6277.jpg

├── dog.6278.jpg

├── dog.6279.jpg

├── dog.627.jpg

├── dog.6280.jpg

├── dog.6281.jpg

├── dog.6282.jpg

├── dog.6283.jpg

├── dog.6284.jpg

├── dog.6285.jpg

├── dog.6286.jpg

├── dog.6287.jpg

├── dog.6288.jpg

├── dog.6289.jpg

├── dog.628.jpg

├── dog.6290.jpg

├── dog.6291.jpg

├── dog.6292.jpg

├── dog.6293.jpg

├── dog.6294.jpg

├── dog.6295.jpg

├── dog.6296.jpg

├── dog.6297.jpg

├── dog.6298.jpg

├── dog.6299.jpg

├── dog.629.jpg

├── dog.62.jpg

├── dog.6300.jpg

├── dog.6301.jpg

├── dog.6302.jpg

├── dog.6303.jpg

├── dog.6304.jpg

├── dog.6305.jpg

├── dog.6306.jpg

├── dog.6307.jpg

├── dog.6308.jpg

├── dog.6309.jpg

├── dog.630.jpg

├── dog.6310.jpg

├── dog.6311.jpg

├── dog.6312.jpg

├── dog.6313.jpg

├── dog.6314.jpg

├── dog.6315.jpg

├── dog.6316.jpg

├── dog.6317.jpg

├── dog.6318.jpg

├── dog.6319.jpg

├── dog.631.jpg

├── dog.6320.jpg

├── dog.6321.jpg

├── dog.6322.jpg

├── dog.6323.jpg

├── dog.6324.jpg

├── dog.6325.jpg

├── dog.6326.jpg

├── dog.6327.jpg

├── dog.6328.jpg

├── dog.6329.jpg

├── dog.632.jpg

├── dog.6330.jpg

├── dog.6331.jpg

├── dog.6332.jpg

├── dog.6333.jpg

├── dog.6334.jpg

├── dog.6335.jpg

├── dog.6336.jpg

├── dog.6337.jpg

├── dog.6338.jpg

├── dog.6339.jpg

├── dog.633.jpg

├── dog.6340.jpg

├── dog.6341.jpg

├── dog.6342.jpg

├── dog.6343.jpg

├── dog.6344.jpg

├── dog.6345.jpg

├── dog.6346.jpg

├── dog.6347.jpg

├── dog.6348.jpg

├── dog.6349.jpg

├── dog.634.jpg

├── dog.6350.jpg

├── dog.6351.jpg

├── dog.6352.jpg

├── dog.6353.jpg

├── dog.6354.jpg

├── dog.6355.jpg

├── dog.6356.jpg

├── dog.6357.jpg

├── dog.6358.jpg

├── dog.6359.jpg

├── dog.635.jpg

├── dog.6360.jpg

├── dog.6361.jpg

├── dog.6362.jpg

├── dog.6363.jpg

├── dog.6364.jpg

├── dog.6365.jpg

├── dog.6366.jpg

├── dog.6367.jpg

├── dog.6368.jpg

├── dog.6369.jpg

├── dog.636.jpg

├── dog.6370.jpg

├── dog.6371.jpg

├── dog.6372.jpg

├── dog.6373.jpg

├── dog.6374.jpg

├── dog.6375.jpg

├── dog.6376.jpg

├── dog.6377.jpg

├── dog.6378.jpg

├── dog.6379.jpg

├── dog.637.jpg

├── dog.6380.jpg

├── dog.6381.jpg

├── dog.6382.jpg

├── dog.6383.jpg

├── dog.6384.jpg

├── dog.6385.jpg

├── dog.6386.jpg

├── dog.6387.jpg

├── dog.6388.jpg

├── dog.6389.jpg

├── dog.638.jpg

├── dog.6390.jpg

├── dog.6391.jpg

├── dog.6392.jpg

├── dog.6393.jpg

├── dog.6394.jpg

├── dog.6395.jpg

├── dog.6396.jpg

├── dog.6397.jpg

├── dog.6398.jpg

├── dog.6399.jpg

├── dog.639.jpg

├── dog.63.jpg

├── dog.6400.jpg

├── dog.6401.jpg

├── dog.6402.jpg

├── dog.6403.jpg

├── dog.6404.jpg

├── dog.6405.jpg

├── dog.6406.jpg

├── dog.6407.jpg

├── dog.6408.jpg

├── dog.6409.jpg

├── dog.640.jpg

├── dog.6410.jpg

├── dog.6411.jpg

├── dog.6412.jpg

├── dog.6413.jpg

├── dog.6414.jpg

├── dog.6415.jpg

├── dog.6416.jpg

├── dog.6417.jpg

├── dog.6418.jpg

├── dog.6419.jpg

├── dog.641.jpg

├── dog.6420.jpg

├── dog.6421.jpg

├── dog.6422.jpg

├── dog.6423.jpg

├── dog.6424.jpg

├── dog.6425.jpg

├── dog.6426.jpg

├── dog.6427.jpg

├── dog.6428.jpg

├── dog.6429.jpg

├── dog.642.jpg

├── dog.6430.jpg

├── dog.6431.jpg

├── dog.6432.jpg

├── dog.6433.jpg

├── dog.6434.jpg

├── dog.6435.jpg

├── dog.6436.jpg

├── dog.6437.jpg

├── dog.6438.jpg

├── dog.6439.jpg

├── dog.643.jpg

├── dog.6440.jpg

├── dog.6441.jpg

├── dog.6442.jpg

├── dog.6443.jpg

├── dog.6444.jpg

├── dog.6445.jpg

├── dog.6446.jpg

├── dog.6447.jpg

├── dog.6448.jpg

├── dog.6449.jpg

├── dog.644.jpg

├── dog.6450.jpg

├── dog.6451.jpg

├── dog.6452.jpg

├── dog.6453.jpg

├── dog.6454.jpg

├── dog.6455.jpg

├── dog.6456.jpg

├── dog.6457.jpg

├── dog.6458.jpg

├── dog.6459.jpg

├── dog.645.jpg

├── dog.6460.jpg

├── dog.6461.jpg

├── dog.6462.jpg

├── dog.6463.jpg

├── dog.6464.jpg

├── dog.6465.jpg

├── dog.6466.jpg

├── dog.6467.jpg

├── dog.6468.jpg

├── dog.6469.jpg

├── dog.646.jpg

├── dog.6470.jpg

├── dog.6471.jpg

├── dog.6472.jpg

├── dog.6473.jpg

├── dog.6474.jpg

├── dog.6475.jpg

├── dog.6476.jpg

├── dog.6477.jpg

├── dog.6478.jpg

├── dog.6479.jpg

├── dog.647.jpg

├── dog.6480.jpg

├── dog.6481.jpg

├── dog.6482.jpg

├── dog.6483.jpg

├── dog.6484.jpg

├── dog.6485.jpg

├── dog.6486.jpg

├── dog.6487.jpg

├── dog.6488.jpg

├── dog.6489.jpg

├── dog.648.jpg

├── dog.6490.jpg

├── dog.6491.jpg

├── dog.6492.jpg

├── dog.6493.jpg

├── dog.6494.jpg

├── dog.6495.jpg

├── dog.6496.jpg

├── dog.6497.jpg

├── dog.6498.jpg

├── dog.6499.jpg

├── dog.649.jpg

├── dog.64.jpg

├── dog.6500.jpg

├── dog.6501.jpg

├── dog.6502.jpg

├── dog.6503.jpg

├── dog.6504.jpg

├── dog.6505.jpg

├── dog.6506.jpg

├── dog.6507.jpg

├── dog.6508.jpg

├── dog.6509.jpg

├── dog.650.jpg

├── dog.6510.jpg

├── dog.6511.jpg

├── dog.6512.jpg

├── dog.6513.jpg

├── dog.6514.jpg

├── dog.6515.jpg

├── dog.6516.jpg

├── dog.6517.jpg

├── dog.6518.jpg

├── dog.6519.jpg

├── dog.651.jpg

├── dog.6520.jpg

├── dog.6521.jpg

├── dog.6522.jpg

├── dog.6523.jpg

├── dog.6524.jpg

├── dog.6525.jpg

├── dog.6526.jpg

├── dog.6527.jpg

├── dog.6528.jpg

├── dog.6529.jpg

├── dog.652.jpg

├── dog.6530.jpg

├── dog.6531.jpg

├── dog.6532.jpg

├── dog.6533.jpg

├── dog.6534.jpg

├── dog.6535.jpg

├── dog.6536.jpg

├── dog.6537.jpg

├── dog.6538.jpg

├── dog.6539.jpg

├── dog.653.jpg

├── dog.6540.jpg

├── dog.6541.jpg

├── dog.6542.jpg

├── dog.6543.jpg

├── dog.6544.jpg

├── dog.6545.jpg

├── dog.6546.jpg

├── dog.6547.jpg

├── dog.6548.jpg

├── dog.6549.jpg

├── dog.654.jpg

├── dog.6550.jpg

├── dog.6551.jpg

├── dog.6552.jpg

├── dog.6553.jpg

├── dog.6554.jpg

├── dog.6555.jpg

├── dog.6556.jpg

├── dog.6557.jpg

├── dog.6558.jpg

├── dog.6559.jpg

├── dog.655.jpg

├── dog.6560.jpg

├── dog.6561.jpg

├── dog.6562.jpg

├── dog.6563.jpg

├── dog.6564.jpg

├── dog.6565.jpg

├── dog.6566.jpg

├── dog.6567.jpg

├── dog.6568.jpg

├── dog.6569.jpg

├── dog.656.jpg

├── dog.6570.jpg

├── dog.6571.jpg

├── dog.6572.jpg

├── dog.6573.jpg

├── dog.6574.jpg

├── dog.6575.jpg

├── dog.6576.jpg

├── dog.6577.jpg

├── dog.6578.jpg

├── dog.6579.jpg

├── dog.657.jpg

├── dog.6580.jpg

├── dog.6581.jpg

├── dog.6582.jpg

├── dog.6583.jpg

├── dog.6584.jpg

├── dog.6585.jpg

├── dog.6586.jpg

├── dog.6587.jpg

├── dog.6588.jpg

├── dog.6589.jpg

├── dog.658.jpg

├── dog.6590.jpg

├── dog.6591.jpg

├── dog.6592.jpg

├── dog.6593.jpg

├── dog.6594.jpg

├── dog.6595.jpg

├── dog.6596.jpg

├── dog.6597.jpg

├── dog.6598.jpg

├── dog.6599.jpg

├── dog.659.jpg

├── dog.65.jpg

├── dog.6600.jpg

├── dog.6601.jpg

├── dog.6602.jpg

├── dog.6603.jpg

├── dog.6604.jpg

├── dog.6605.jpg

├── dog.6606.jpg

├── dog.6607.jpg

├── dog.6608.jpg

├── dog.6609.jpg

├── dog.660.jpg

├── dog.6610.jpg

├── dog.6611.jpg

├── dog.6612.jpg

├── dog.6613.jpg

├── dog.6614.jpg

├── dog.6615.jpg

├── dog.6616.jpg

├── dog.6617.jpg

├── dog.6618.jpg

├── dog.6619.jpg

├── dog.661.jpg

├── dog.6620.jpg

├── dog.6621.jpg

├── dog.6622.jpg

├── dog.6623.jpg

├── dog.6624.jpg

├── dog.6625.jpg

├── dog.6626.jpg

├── dog.6627.jpg

├── dog.6628.jpg

├── dog.6629.jpg

├── dog.662.jpg

├── dog.6630.jpg

├── dog.6631.jpg

├── dog.6632.jpg

├── dog.6633.jpg

├── dog.6634.jpg

├── dog.6635.jpg

├── dog.6636.jpg

├── dog.6637.jpg

├── dog.6638.jpg

├── dog.6639.jpg

├── dog.663.jpg

├── dog.6640.jpg

├── dog.6641.jpg

├── dog.6642.jpg

├── dog.6643.jpg

├── dog.6644.jpg

├── dog.6645.jpg

├── dog.6646.jpg

├── dog.6647.jpg

├── dog.6648.jpg

├── dog.6649.jpg

├── dog.664.jpg

├── dog.6650.jpg

├── dog.6651.jpg

├── dog.6652.jpg

├── dog.6653.jpg

├── dog.6654.jpg

├── dog.6655.jpg

├── dog.6656.jpg

├── dog.6657.jpg

├── dog.6658.jpg

├── dog.6659.jpg

├── dog.665.jpg

├── dog.6660.jpg

├── dog.6661.jpg

├── dog.6662.jpg

├── dog.6663.jpg

├── dog.6664.jpg

├── dog.6665.jpg

├── dog.6666.jpg

├── dog.6667.jpg

├── dog.6668.jpg

├── dog.6669.jpg

├── dog.666.jpg

├── dog.6670.jpg

├── dog.6671.jpg

├── dog.6672.jpg

├── dog.6673.jpg

├── dog.6674.jpg

├── dog.6675.jpg

├── dog.6676.jpg

├── dog.6677.jpg

├── dog.6678.jpg

├── dog.6679.jpg

├── dog.667.jpg

├── dog.6680.jpg

├── dog.6681.jpg

├── dog.6682.jpg

├── dog.6683.jpg

├── dog.6684.jpg

├── dog.6685.jpg

├── dog.6686.jpg

├── dog.6687.jpg

├── dog.6688.jpg

├── dog.6689.jpg

├── dog.668.jpg

├── dog.6690.jpg

├── dog.6691.jpg

├── dog.6692.jpg

├── dog.6693.jpg

├── dog.6694.jpg

├── dog.6695.jpg

├── dog.6696.jpg

├── dog.6697.jpg

├── dog.6698.jpg

├── dog.6699.jpg

├── dog.669.jpg

├── dog.66.jpg

├── dog.6700.jpg

├── dog.6701.jpg

├── dog.6702.jpg

├── dog.6703.jpg

├── dog.6704.jpg

├── dog.6705.jpg

├── dog.6706.jpg

├── dog.6707.jpg

├── dog.6708.jpg

├── dog.6709.jpg

├── dog.670.jpg

├── dog.6710.jpg

├── dog.6711.jpg

├── dog.6712.jpg

├── dog.6713.jpg

├── dog.6714.jpg

├── dog.6715.jpg

├── dog.6716.jpg

├── dog.6717.jpg

├── dog.6718.jpg

├── dog.6719.jpg

├── dog.671.jpg

├── dog.6720.jpg

├── dog.6721.jpg

├── dog.6722.jpg

├── dog.6723.jpg

├── dog.6724.jpg

├── dog.6725.jpg

├── dog.6726.jpg

├── dog.6727.jpg

├── dog.6728.jpg

├── dog.6729.jpg

├── dog.672.jpg

├── dog.6730.jpg

├── dog.6731.jpg

├── dog.6732.jpg

├── dog.6733.jpg

├── dog.6734.jpg

├── dog.6735.jpg

├── dog.6736.jpg

├── dog.6737.jpg

├── dog.6738.jpg

├── dog.6739.jpg

├── dog.673.jpg

├── dog.6740.jpg

├── dog.6741.jpg

├── dog.6742.jpg

├── dog.6743.jpg

├── dog.6744.jpg

├── dog.6745.jpg

├── dog.6746.jpg

├── dog.6747.jpg

├── dog.6748.jpg

├── dog.6749.jpg

├── dog.674.jpg

├── dog.6750.jpg

├── dog.6751.jpg

├── dog.6752.jpg

├── dog.6753.jpg

├── dog.6754.jpg

├── dog.6755.jpg

├── dog.6756.jpg

├── dog.6757.jpg

├── dog.6758.jpg

├── dog.6759.jpg

├── dog.675.jpg

├── dog.6760.jpg

├── dog.6761.jpg

├── dog.6762.jpg

├── dog.6763.jpg

├── dog.6764.jpg

├── dog.6765.jpg

├── dog.6766.jpg

├── dog.6767.jpg

├── dog.6768.jpg

├── dog.6769.jpg

├── dog.676.jpg

├── dog.6770.jpg

├── dog.6771.jpg

├── dog.6772.jpg

├── dog.6773.jpg

├── dog.6774.jpg

├── dog.6775.jpg

├── dog.6776.jpg

├── dog.6777.jpg

├── dog.6778.jpg

├── dog.6779.jpg

├── dog.677.jpg

├── dog.6780.jpg

├── dog.6781.jpg

├── dog.6782.jpg

├── dog.6783.jpg

├── dog.6784.jpg

├── dog.6785.jpg

├── dog.6786.jpg

├── dog.6787.jpg

├── dog.6788.jpg

├── dog.6789.jpg

├── dog.678.jpg

├── dog.6790.jpg

├── dog.6791.jpg

├── dog.6792.jpg

├── dog.6793.jpg

├── dog.6794.jpg

├── dog.6795.jpg

├── dog.6796.jpg

├── dog.6797.jpg

├── dog.6798.jpg

├── dog.6799.jpg

├── dog.679.jpg

├── dog.67.jpg

├── dog.6800.jpg

├── dog.6801.jpg

├── dog.6802.jpg

├── dog.6803.jpg

├── dog.6804.jpg

├── dog.6805.jpg

├── dog.6806.jpg

├── dog.6807.jpg

├── dog.6808.jpg

├── dog.6809.jpg

├── dog.680.jpg

├── dog.6810.jpg

├── dog.6811.jpg

├── dog.6812.jpg

├── dog.6813.jpg

├── dog.6814.jpg

├── dog.6815.jpg

├── dog.6816.jpg

├── dog.6817.jpg

├── dog.6818.jpg

├── dog.6819.jpg

├── dog.681.jpg

├── dog.6820.jpg

├── dog.6821.jpg

├── dog.6822.jpg

├── dog.6823.jpg

├── dog.6824.jpg

├── dog.6825.jpg

├── dog.6826.jpg

├── dog.6827.jpg

├── dog.6828.jpg

├── dog.6829.jpg

├── dog.682.jpg

├── dog.6830.jpg

├── dog.6831.jpg

├── dog.6832.jpg

├── dog.6833.jpg

├── dog.6834.jpg

├── dog.6835.jpg

├── dog.6836.jpg

├── dog.6837.jpg

├── dog.6838.jpg

├── dog.6839.jpg

├── dog.683.jpg

├── dog.6840.jpg

├── dog.6841.jpg

├── dog.6842.jpg

├── dog.6843.jpg

├── dog.6844.jpg

├── dog.6845.jpg

├── dog.6846.jpg

├── dog.6847.jpg

├── dog.6848.jpg

├── dog.6849.jpg

├── dog.684.jpg

├── dog.6850.jpg

├── dog.6851.jpg

├── dog.6852.jpg

├── dog.6853.jpg

├── dog.6854.jpg

├── dog.6855.jpg

├── dog.6856.jpg

├── dog.6857.jpg

├── dog.6858.jpg

├── dog.6859.jpg

├── dog.685.jpg

├── dog.6860.jpg

├── dog.6861.jpg

├── dog.6862.jpg

├── dog.6863.jpg

├── dog.6864.jpg

├── dog.6865.jpg

├── dog.6866.jpg

├── dog.6867.jpg

├── dog.6868.jpg

├── dog.6869.jpg

├── dog.686.jpg

├── dog.6870.jpg

├── dog.6871.jpg

├── dog.6872.jpg

├── dog.6873.jpg

├── dog.6874.jpg

├── dog.6875.jpg

├── dog.6876.jpg

├── dog.6877.jpg

├── dog.6878.jpg

├── dog.6879.jpg

├── dog.687.jpg

├── dog.6880.jpg

├── dog.6881.jpg

├── dog.6882.jpg

├── dog.6883.jpg

├── dog.6884.jpg

├── dog.6885.jpg

├── dog.6886.jpg

├── dog.6887.jpg

├── dog.6888.jpg

├── dog.6889.jpg

├── dog.688.jpg

├── dog.6890.jpg

├── dog.6891.jpg

├── dog.6892.jpg

├── dog.6893.jpg

├── dog.6894.jpg

├── dog.6895.jpg

├── dog.6896.jpg

├── dog.6897.jpg

├── dog.6898.jpg

├── dog.6899.jpg

├── dog.689.jpg

├── dog.68.jpg

├── dog.6900.jpg

├── dog.6901.jpg

├── dog.6902.jpg

├── dog.6903.jpg

├── dog.6904.jpg

├── dog.6905.jpg

├── dog.6906.jpg

├── dog.6907.jpg

├── dog.6908.jpg

├── dog.6909.jpg

├── dog.690.jpg

├── dog.6910.jpg

├── dog.6911.jpg

├── dog.6912.jpg

├── dog.6913.jpg

├── dog.6914.jpg

├── dog.6915.jpg

├── dog.6916.jpg

├── dog.6917.jpg

├── dog.6918.jpg

├── dog.6919.jpg

├── dog.691.jpg

├── dog.6920.jpg

├── dog.6921.jpg

├── dog.6922.jpg

├── dog.6923.jpg

├── dog.6924.jpg

├── dog.6925.jpg

├── dog.6926.jpg

├── dog.6927.jpg

├── dog.6928.jpg

├── dog.6929.jpg

├── dog.692.jpg

├── dog.6930.jpg

├── dog.6931.jpg

├── dog.6932.jpg

├── dog.6933.jpg

├── dog.6934.jpg

├── dog.6935.jpg

├── dog.6936.jpg

├── dog.6937.jpg

├── dog.6938.jpg

├── dog.6939.jpg

├── dog.693.jpg

├── dog.6940.jpg

├── dog.6941.jpg

├── dog.6942.jpg

├── dog.6943.jpg

├── dog.6944.jpg

├── dog.6945.jpg

├── dog.6946.jpg

├── dog.6947.jpg

├── dog.6948.jpg

├── dog.6949.jpg

├── dog.694.jpg

├── dog.6950.jpg

├── dog.6951.jpg

├── dog.6952.jpg

├── dog.6953.jpg

├── dog.6954.jpg

├── dog.6955.jpg

├── dog.6956.jpg

├── dog.6957.jpg

├── dog.6958.jpg

├── dog.6959.jpg

├── dog.695.jpg

├── dog.6960.jpg

├── dog.6961.jpg

├── dog.6962.jpg

├── dog.6963.jpg

├── dog.6964.jpg

├── dog.6965.jpg

├── dog.6966.jpg

├── dog.6967.jpg

├── dog.6968.jpg

├── dog.6969.jpg

├── dog.696.jpg

├── dog.6970.jpg

├── dog.6971.jpg

├── dog.6972.jpg

├── dog.6973.jpg

├── dog.6974.jpg

├── dog.6975.jpg

├── dog.6976.jpg

├── dog.6977.jpg

├── dog.6978.jpg

├── dog.6979.jpg

├── dog.697.jpg

├── dog.6980.jpg

├── dog.6981.jpg

├── dog.6982.jpg

├── dog.6983.jpg

├── dog.6984.jpg

├── dog.6985.jpg

├── dog.6986.jpg

├── dog.6987.jpg

├── dog.6988.jpg

├── dog.6989.jpg

├── dog.698.jpg

├── dog.6990.jpg

├── dog.6991.jpg

├── dog.6992.jpg

├── dog.6993.jpg

├── dog.6994.jpg

├── dog.6995.jpg

├── dog.6996.jpg

├── dog.6997.jpg

├── dog.6998.jpg

├── dog.6999.jpg

├── dog.699.jpg

├── dog.69.jpg

├── dog.6.jpg

├── dog.7000.jpg

├── dog.7001.jpg

├── dog.7002.jpg

├── dog.7003.jpg

├── dog.7004.jpg

├── dog.7005.jpg

├── dog.7006.jpg

├── dog.7007.jpg

├── dog.7008.jpg

├── dog.7009.jpg

├── dog.700.jpg

├── dog.7010.jpg

├── dog.7011.jpg

├── dog.7012.jpg

├── dog.7013.jpg

├── dog.7014.jpg

├── dog.7015.jpg

├── dog.7016.jpg

├── dog.7017.jpg

├── dog.7018.jpg

├── dog.7019.jpg

├── dog.701.jpg

├── dog.7020.jpg

├── dog.7021.jpg

├── dog.7022.jpg

├── dog.7023.jpg

├── dog.7024.jpg

├── dog.7025.jpg

├── dog.7026.jpg

├── dog.7027.jpg

├── dog.7028.jpg

├── dog.7029.jpg

├── dog.702.jpg

├── dog.7030.jpg

├── dog.7031.jpg

├── dog.7032.jpg

├── dog.7033.jpg

├── dog.7034.jpg

├── dog.7035.jpg

├── dog.7036.jpg

├── dog.7037.jpg

├── dog.7038.jpg

├── dog.7039.jpg

├── dog.703.jpg

├── dog.7040.jpg

├── dog.7041.jpg

├── dog.7042.jpg

├── dog.7043.jpg

├── dog.7044.jpg

├── dog.7045.jpg

├── dog.7046.jpg

├── dog.7047.jpg

├── dog.7048.jpg

├── dog.7049.jpg

├── dog.704.jpg

├── dog.7050.jpg

├── dog.7051.jpg

├── dog.7052.jpg

├── dog.7053.jpg

├── dog.7054.jpg

├── dog.7055.jpg

├── dog.7056.jpg

├── dog.7057.jpg

├── dog.7058.jpg

├── dog.7059.jpg

├── dog.705.jpg

├── dog.7060.jpg

├── dog.7061.jpg

├── dog.7062.jpg

├── dog.7063.jpg

├── dog.7064.jpg

├── dog.7065.jpg

├── dog.7066.jpg

├── dog.7067.jpg

├── dog.7068.jpg

├── dog.7069.jpg

├── dog.706.jpg

├── dog.7070.jpg

├── dog.7071.jpg

├── dog.7072.jpg

├── dog.7073.jpg

├── dog.7074.jpg

├── dog.7075.jpg

├── dog.7076.jpg

├── dog.7077.jpg

├── dog.7078.jpg

├── dog.7079.jpg

├── dog.707.jpg

├── dog.7080.jpg

├── dog.7081.jpg

├── dog.7082.jpg

├── dog.7083.jpg

├── dog.7084.jpg

├── dog.7085.jpg

├── dog.7086.jpg

├── dog.7087.jpg